Why Word Count is a Lie | The Neural Search Shift

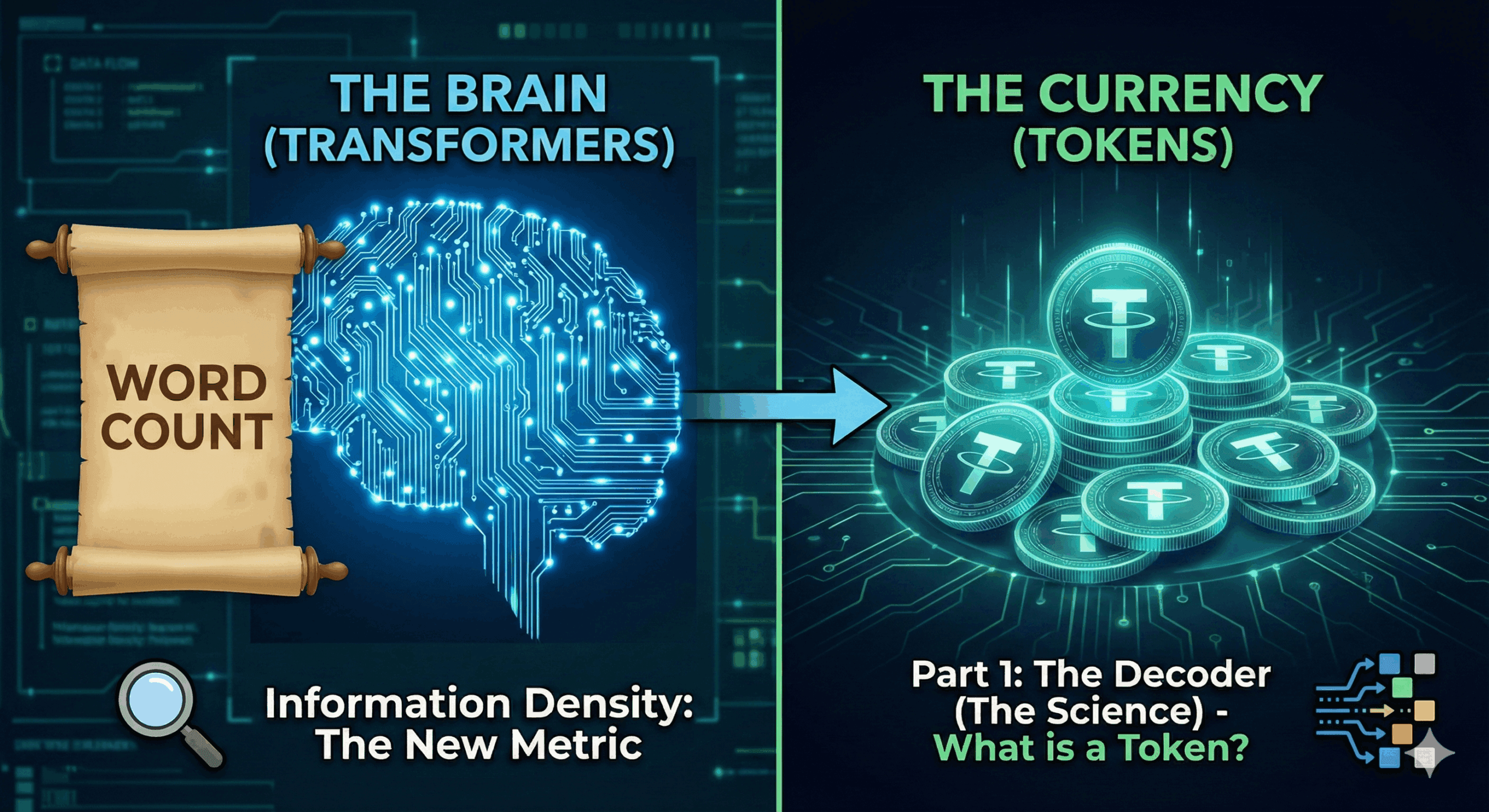

We are moving from the “Brain” (Transformers) to the “Currency” (Tokens). This episode challenges one of the oldest metrics in content marketing: the Word Count.

For years, content contracts were signed based on “word count.” We equated length with depth. But Large Language Models (LLMs) do not read words, and they do not care about length. They operate on a currency called Tokens. In this episode, we explore the economics of AI processing and why “Information Density” is the only metric that matters in 2026.

Part 1: The Decoder (The Science)

What is a Token?

When you feed a blog post into ChatGPT, Gemini, or Claude, the first thing the engine does is chop it up. It doesn’t see “words” like you do. It sees Tokens.

1. The Mechanics of Tokenization

A token is a chunk of characters that frequently appear together.

- Simple words like “apple” or “the” are usually 1 token.

- Complex words like “Generative” might be split into 2 or 3 tokens (e.g., “Gener” + “ative”).

- Rough Math: 1,000 English words is approximately 1,300 tokens. The exact count depends on the model’s tokenizer.
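The rough math above can be turned into a quick estimator. This is a minimal sketch, not a real tokenizer: the function name `estimate_tokens` and the `tokens_per_word` parameter are illustrative, and the 1.3 ratio is only the rule of thumb quoted above.

```python
def estimate_tokens(text: str, tokens_per_word: float = 1.3) -> int:
    """Rough token estimate from a whitespace word count.

    tokens_per_word=1.3 is the rule of thumb from the text (an assumption);
    real counts depend on the model's tokenizer and the language used.
    """
    word_count = len(text.split())
    return round(word_count * tokens_per_word)


# A 1,000-word draft comes out at roughly 1,300 tokens under this heuristic.
draft = "word " * 1000
print(estimate_tokens(draft))
```

For billing or hard limits, count with the tokenizer that ships with the model you are targeting rather than with a ratio like this.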

2. The “Context Window” (The Budget)

Every LLM has a “Context Window”: a limit on how much information it can hold in its short-term memory at one time.

- Think of the Context Window as a budget.

- If a model has an 8,000-token limit, and you feed it a 3,000-token article full of fluff, you have just consumed nearly 40% of its budget with low-value data.
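The budget arithmetic in that example is simple enough to check in a few lines. A small sketch, where `budget_share` is a hypothetical helper name and the numbers are the ones from the bullet above:

```python
def budget_share(document_tokens: int, context_limit: int) -> float:
    """Fraction of the context window that a single document consumes."""
    if context_limit <= 0:
        raise ValueError("context_limit must be positive")
    return document_tokens / context_limit


# The example from the text: a 3,000-token article in an 8,000-token window.
share = budget_share(3_000, 8_000)
print(f"{share:.1%}")  # 37.5%
```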

3. The Cost of Compute

For an AI, processing text costs energy (GPU cycles).

- High Entropy (High Value): Unique insights, data, and logic. The AI “learns” from this.

- Low Entropy (Low Value): Repetitive introspection, long-winded intros, and keyword stuffing.

- The Science: When an AI processes “Low Entropy” text (fluff), its “Attention Mechanism” (discussed in Ep 1) is diluted across tokens that carry no information. It struggles to find the “needle” of your insight because you buried it in a “haystack” of wasted tokens.

Part 2: The Strategist (The Playbook)

Optimizing for Information Density

In the Old SEO world, we wrote 2,500-word guides to signal “authority” to Google. We added fluff to increase “Time on Page.” In the GenAI world, brevity is power. If you take 1,500 tokens to say what fits in 500, you are signaling to the AI that your content is inefficient.

1. The New Metric: Information Density

You need to shift your KPI from “Length” to “Density.”

- Formula: Information Density = Total Unique Insights / Total Tokens Used.

- The Goal: Maximize the number of facts per token.

- Example:

  - Low Density: “In the rapidly evolving world of digital marketing, it is important to note that customer retention is key…” (about 20 tokens, 0 new facts).

  - High Density: “Customer retention costs 5x less than acquisition.” (about 9 tokens, 1 hard fact).
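Applied to the two examples above, the formula works out as follows. This is a sketch under stated assumptions: `information_density` is an illustrative name, and the insight counts are editorial judgments you supply by hand, not something the code can detect.

```python
def information_density(unique_insights: int, total_tokens: int) -> float:
    """Information Density = Total Unique Insights / Total Tokens Used."""
    if total_tokens <= 0:
        raise ValueError("total_tokens must be positive")
    return unique_insights / total_tokens


# Token and insight counts are taken from the two example sentences above.
low = information_density(unique_insights=0, total_tokens=20)
high = information_density(unique_insights=1, total_tokens=9)

print(f"low density:  {low:.3f}")   # 0.000
print(f"high density: {high:.3f}")  # 0.111
```

The absolute numbers matter less than the comparison: the same score computed before and after an edit shows whether a rewrite actually removed fluff.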

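2. The “Needle in a Haystack” Problem

Research has shown that LLMs retrieve information less reliably when it sits in the middle of a long input than when it appears near the beginning or the end (the “Lost in the Middle” phenomenon). Fluff makes the input longer and pushes your key facts deeper into that middle.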

- The Strategy: Do not bury your answers. If you are answering “How to fix a leaky faucet,” do not start with the history of plumbing.

- Action: Structure your content like a Pyramid.

  - Top: The Direct Answer (The Snippet).

  - Middle: The Supporting Data.

  - Bottom: The Context/Nuance.

- This ensures the AI grabs the most valuable tokens first.
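One way to picture the Pyramid is as a sort followed by a cut-off. The sketch below is illustrative only: the `Section` class, the priority numbers, and the word-based budget (a stand-in for tokens) are all assumptions, and the section texts are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Section:
    label: str
    text: str
    priority: int  # 1 = direct answer, 2 = supporting data, 3 = context/nuance


def pyramid_order(sections: list[Section]) -> list[Section]:
    """Put the direct answer first and the context last."""
    return sorted(sections, key=lambda s: s.priority)


def fit_to_budget(sections: list[Section], max_words: int) -> list[Section]:
    """Keep whole sections, in pyramid order, until the word budget runs out."""
    kept, used = [], 0
    for section in pyramid_order(sections):
        words = len(section.text.split())
        if used + words > max_words:
            break  # everything lower in the pyramid is dropped
        kept.append(section)
        used += words
    return kept


# Placeholder draft that opens with background instead of the answer.
draft = [
    Section("context", "nuance " * 50, priority=3),
    Section("answer", "answer " * 10, priority=1),
    Section("data", "data " * 20, priority=2),
]

print([s.label for s in fit_to_budget(draft, max_words=40)])
```

With a 40-word budget, the answer and the data survive and the context is the part that gets cut, which is the outcome the Pyramid is designed for.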

3. Stop “Stretching” Content

Writers were trained to “expand” on ideas to hit word counts. You must now train them to “contract” ideas to hit Insight Goals.

- The Strategy: Use bullet points and tables.

- Why: Tables are token-efficient. They present structured relationships between data points without the “connective tissue” of fluffy sentences. AI models handle tables well because the relationship between a row and a column is explicit in the structure and does not have to be inferred from prose.
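As a sketch of what “contracting” prose into a table can look like, the helper below renders a list of records as a pipe-delimited table. The function name `to_markdown_table` and the sample rows are hypothetical placeholders. Whether the table is actually cheaper than the prose it replaces depends on the tokenizer, so measure rather than assume.

```python
def to_markdown_table(rows: list[dict[str, str]]) -> str:
    """Render records as a pipe-delimited table; the first row defines the columns."""
    if not rows:
        return ""
    headers = list(rows[0].keys())
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        # Missing keys become empty cells so every row has the same width.
        lines.append("| " + " | ".join(str(row.get(h, "")) for h in headers) + " |")
    return "\n".join(lines)


# Placeholder data, not real figures.
rows = [
    {"Plan": "A", "Seats": "5"},
    {"Plan": "B", "Seats": "20"},
]
print(to_markdown_table(rows))
```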

ContentXir Intelligence

The Efficiency Score

At ContentXir, we view content ingestion as a “bandwidth” issue. Search engines have limited bandwidth to crawl and index the web. GenAI engines have limited compute to process it.

If your site is “bloated” with low-density content, you are essentially spamming the AI’s context window. The models will prioritize sources that give them the answer faster and with less compute.

Next Up on S01E03: Embeddings & Vector Space

Related Insights

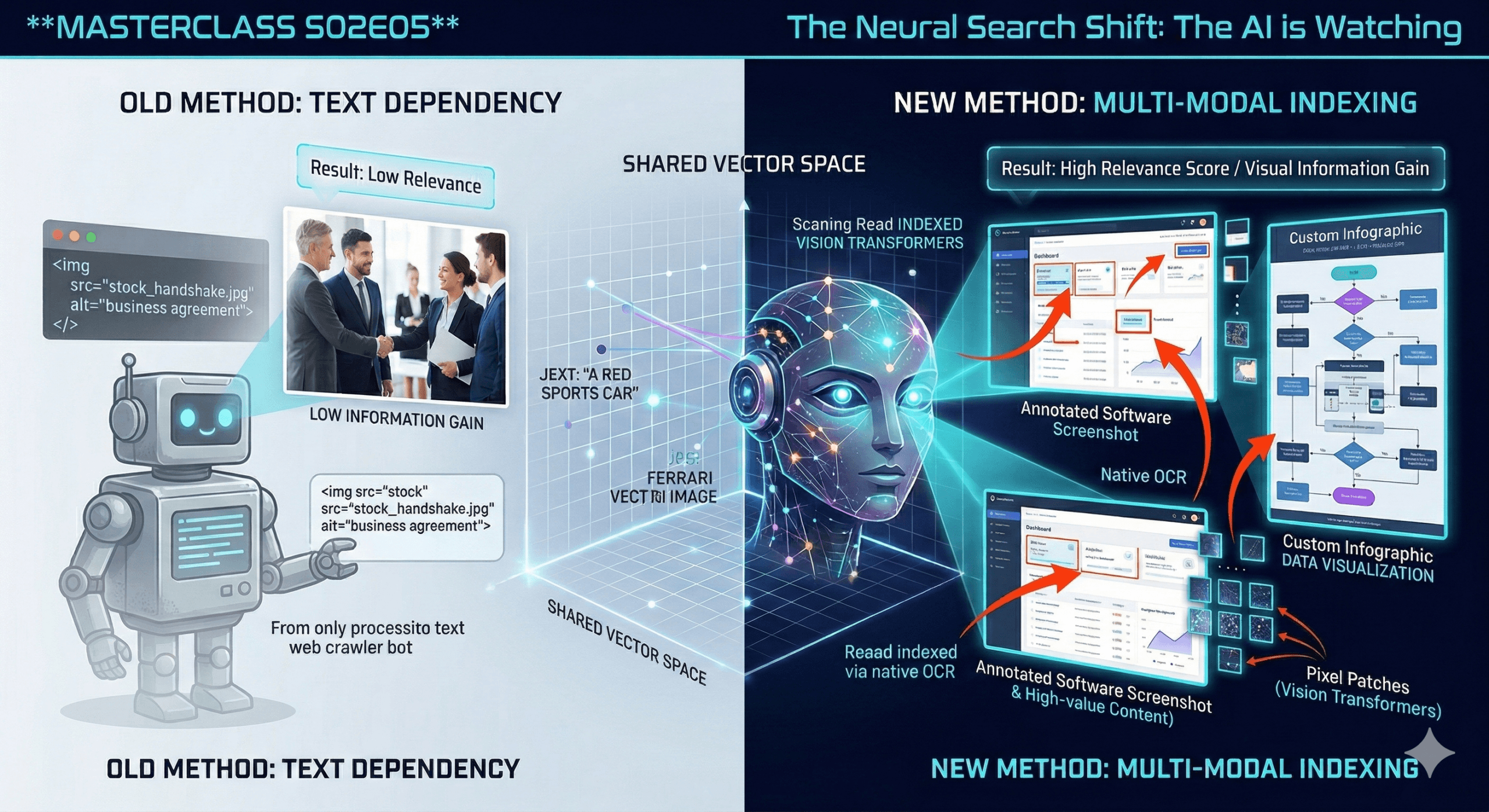

Visual Search & Multi-Modal Indexing | see how the AI is watching you

We are moving beyond text. The most profound shift in AI over the last 12 months isn’t just that it got smarter at reading—it’s that it learned to see. If…

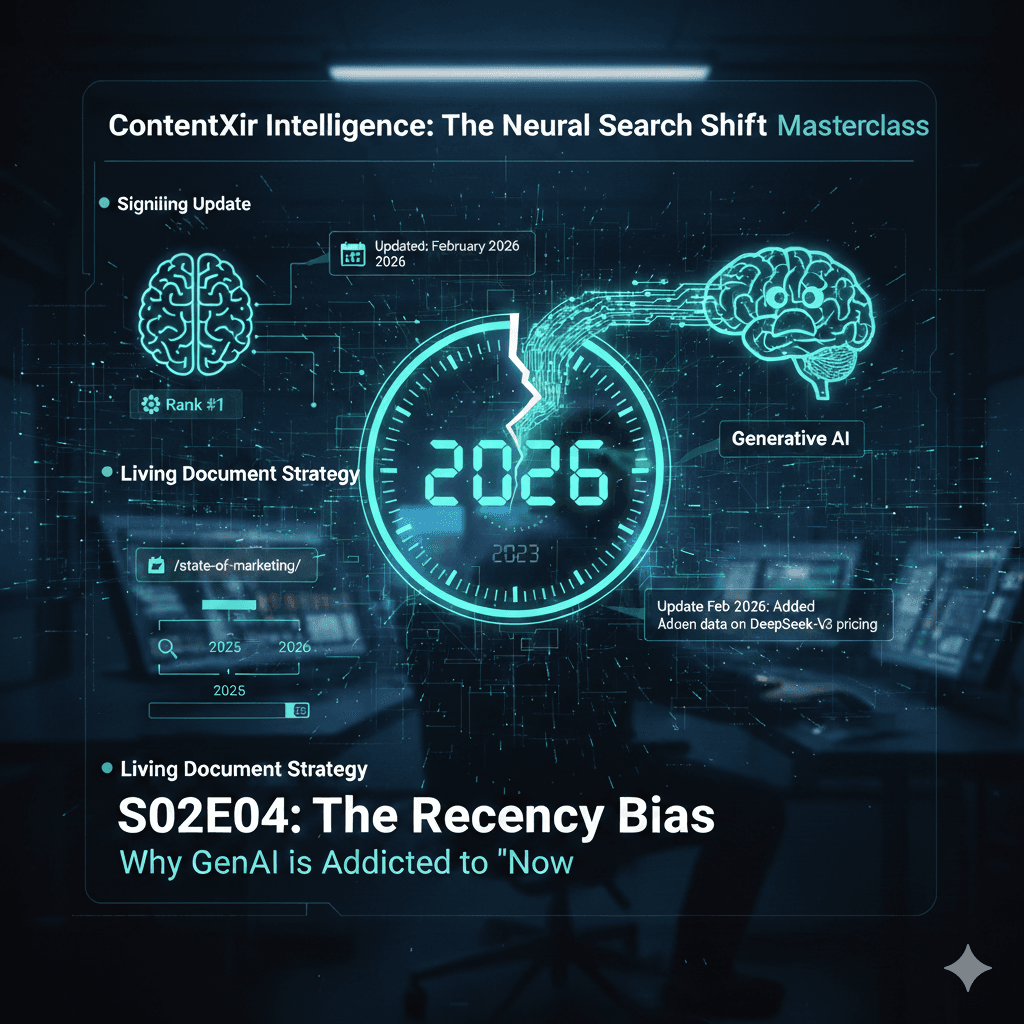

The Recency Bias | Why GenAI is Addicted to “Now”

We have discussed how to be cited. Now we discuss when to be cited. Generative AI has a massive insecurity: it knows its internal memory is outdated. Therefore, it over-corrects…

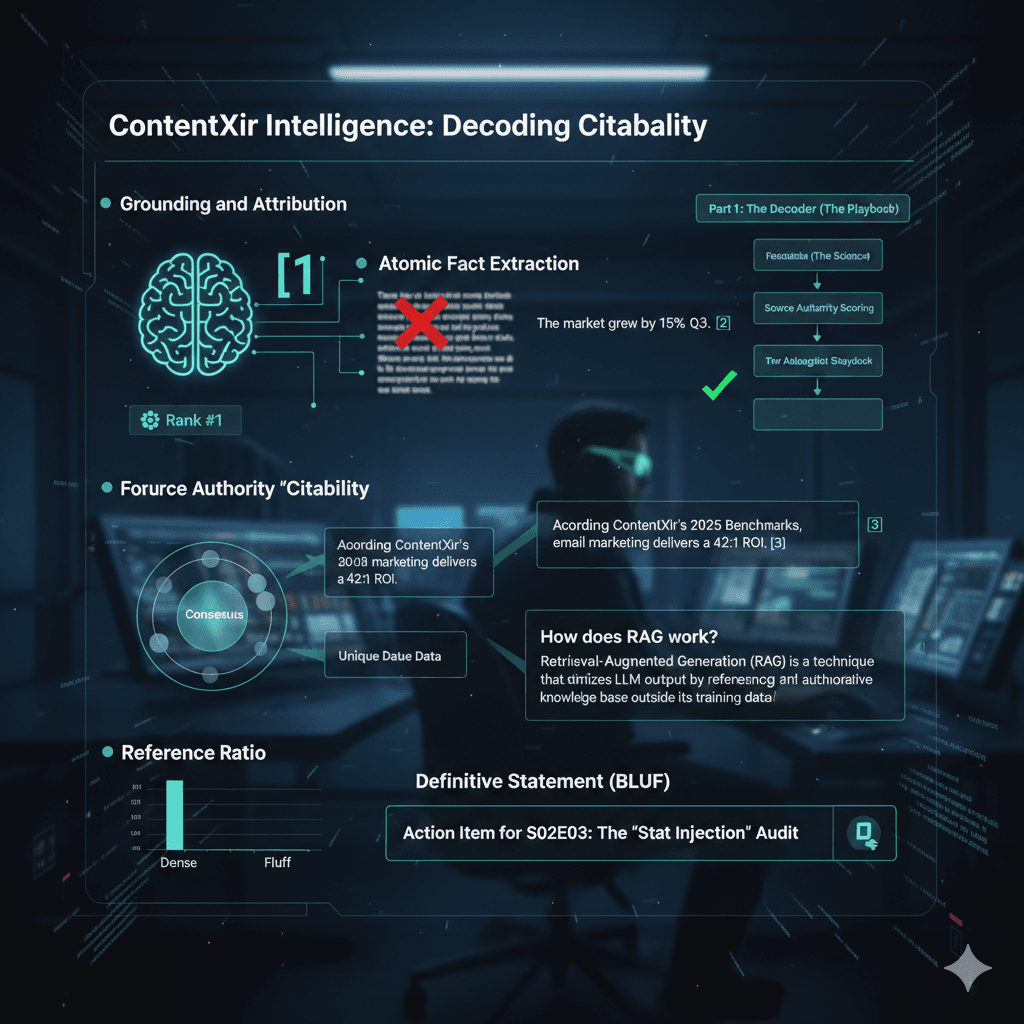

How to win the AIO | Crack the mystery of getting citation in generative search engine result

We are tackling the new currency of the web. Traffic is no longer the primary metric; attribution is. If the AI uses your data to answer a user but doesn’t…