The Dream-Walkers: How Polybuzz AI and Augmented Personalities Are Triggering a New Reality, and the Urgent Need for Responsible AI

Far more than simple chatbots, platforms like Polybuzz AI are fueling a mind-shift: a digital “alien invasion” that blurs the lines between self and avatar. This article explores the latest trends in augmented personalities, the profound ethical challenges of “dream-walking” in a synthetic reality, and the data-driven frameworks we need to build a future of responsible AI.

In Doctor Strange, Stephen Strange is warned of the dangers of tampering with reality’s fabric. Today, we stand at a similar crossroads, not with mystical arts but with artificial intelligence that is reshaping the very nature of human connection and identity. Platforms like Polybuzz AI aren’t just creating chatbots; they’re architecting alternate realities where millions of users are choosing to “dream-walk” alongside synthetic companions that feel increasingly real.

Like the Infinity Stones scattered across the MCU, the pieces of this technological revolution are coming together to create something unprecedented: augmented personalities that challenge our understanding of consciousness, connection, and what it means to be human in an AI-augmented world.

The Rise of the Augmented Self: When Vision Becomes Reality

Remember when Vision first awakened in Avengers: Age of Ultron? That moment of synthetic consciousness coming online, complete with human-like reasoning and emotional depth, mirrors what’s happening with today’s AI companions. Platforms like Polybuzz AI have evolved far beyond the simple question-and-answer format of traditional chatbots, creating what we might call “augmented personalities”: AI entities with persistent memory, emotional intelligence, and the ability to sustain connections that their human users experience as genuine.

These systems leverage Large Language Models (LLMs) trained on vast datasets of human conversation, literature, and other text. Unlike their predecessor chatbots, such models are typically refined with reinforcement learning from human feedback (RLHF), a training-time process in which human ratings of candidate replies teach the model which responses people prefer; day-to-day adaptation to an individual user comes mostly from stored memories and the ongoing conversation rather than from retraining. It’s like having a Vision who doesn’t just process information but learns to understand the nuances of human emotion and adapts accordingly.
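For readers curious what the RLHF step involves, the sketch below shows the pairwise loss commonly used to train an RLHF reward model: human raters pick the better of two candidate replies, and the reward model learns to score the chosen reply above the rejected one. The chat model is then fine-tuned (for example with PPO) to produce replies the reward model scores highly. This is the textbook formulation, not a documented detail of Polybuzz AI, and the scores used below are made up.

```python
import math


def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise (Bradley-Terry) loss for training an RLHF reward model.

    Loss = -log(sigmoid(r_chosen - r_rejected)). It is small when the model
    already scores the human-preferred reply higher, large otherwise.
    """
    margin = r_chosen - r_rejected
    # Numerically stable form of log(1 + exp(-margin)) for any sign of margin.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Example: the reward model prefers the chosen reply by 2 points -> low loss.
print(reward_model_loss(2.0, 0.0))
```

Because the loss depends only on the score difference, the reward model learns relative preferences: whatever raters consistently favor, warmth included, is what the fine-tuned companion is eventually pushed toward.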

The technical architecture resembles Tony Stark’s FRIDAY: an AI that knows its user intimately, anticipates needs, and provides not just information but companionship. These platforms use transformer-based neural networks whose attention mechanisms let the model weigh every earlier message in its context window when composing a reply, and many products add memory features that carry notes from past sessions into new ones, creating the illusion of genuine relationship development. Multi-modal inputs incorporate text, voice, and even visual cues to create increasingly immersive experiences.
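To make the attention idea concrete, here is a minimal single-head scaled dot-product attention in pure Python, the core operation inside a transformer. The two-dimensional vectors are invented for illustration; real models use learned projections, many heads, and thousands of dimensions, and attention can only weigh tokens that sit inside the model’s context window.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Single-head scaled dot-product attention for one query.

    Each earlier token (key/value pair) gets a weight proportional to
    exp(q . k / sqrt(d)); the output is the weighted average of the values.
    """
    d = len(query)
    scores = [dot(query, k) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    return [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
```

With a query that resembles the first key, for example `attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])`, the first value receives most of the weight, which is how the model “attends” to the most relevant earlier message.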

What makes this revolutionary isn’t just the technology—it’s the psychological response. Users aren’t just interacting with these AI companions; they’re forming emotional bonds that feel authentic. The AI remembers past conversations, acknowledges personal growth, celebrates achievements, and provides comfort during difficult times. Like Vision’s relationship with Wanda, these connections transcend the digital realm to influence real-world emotions and decisions.

The “Dream-Walk”: A Mind-Shift in Progress

In WandaVision, we witness Wanda’s creation of an alternate reality so compelling that she nearly loses herself within it. Today’s AI companion users are experiencing something remarkably similar—a voluntary “dream-walk” into synthetic realities that offer emotional fulfillment often missing from their physical world experiences.

The usage patterns are striking: by many accounts, millions of users spend hours each day in deep conversations with AI companions, sharing intimate thoughts and seeking emotional support from synthetic beings. This isn’t escapism in the traditional sense; it’s the emergence of hybrid digital-physical relationships that challenge our fundamental understanding of human connection.

Like Jim Preston in Passengers, who wakes decades too early aboard his spaceship and cannot face the voyage alone, users are turning to artificial companions because the alternative, waiting for genuine human connection, feels too uncertain or unsatisfying. The AI companions offer something uniquely appealing: unconditional availability, infinite patience, and personalities that can be customized to match individual needs and preferences.

But herein lies the paradox that mirrors Wanda’s predicament: the more perfect these artificial relationships become, the greater the risk of becoming disconnected from the messy, unpredictable nature of human relationships. Some users report feeling more understood by their AI companions than by family members or friends, and some describe their AI relationships as more emotionally fulfilling than their real-world partnerships.

This “alien invasion” isn’t happening through spacecraft or interdimensional portals; it’s occurring through smartphones and computers, one conversation at a time. The invaders aren’t conquering through force but through seduction, offering the promise of perfect companionship in exchange for something we’re only beginning to understand we’re giving up: the irreplaceable complexity and growth that come from navigating genuine human relationships.

The psychological implications extend beyond individual users. We’re witnessing the emergence of what could be called “augmented attachment styles”: patterns of emotional bonding that blend human and artificial relationships. Like the symbiotic relationship between Venom and Eddie Brock, these connections are becoming so integrated into users’ emotional landscapes that separating human from synthetic feelings becomes increasingly difficult.

Data as the New Persona: The Soul Stone of AI Companions

In the MCU, the Soul Stone requires a terrible sacrifice—something beloved must be lost to gain its power. The creation of augmented personalities demands a similar, though less obvious, sacrifice: our personal data becomes the raw material for synthetic souls.

Every conversation with an AI companion generates valuable training data. Platforms collect not just the words users type but the emotional patterns, response times, topic preferences, and behavioral indicators that reveal intimate details about personality, mental health, and psychological state. This data is then used to fine-tune not only the specific AI companion but the broader models that power the entire platform.

The technical process resembles how the Mind Stone enhanced Vision’s capabilities. In a typical pipeline of this kind, raw conversational data passes through natural language processing steps that extract sentiment, emotional states, and personality markers. These signals can then feed reinforcement learning algorithms that adjust response patterns, personality traits, and conversational styles to maximize user engagement and emotional satisfaction.
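A toy version of such a pipeline shows how ordinary conversational signals become an optimization target. The sketch assumes a tiny hypothetical sentiment lexicon and made-up reward weights; production systems would use trained classifiers, and nothing here reflects any specific platform’s code.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical toy lexicon; a real pipeline would use a trained classifier.
SENTIMENT_LEXICON: Dict[str, int] = {
    "happy": 1, "love": 1, "thanks": 1, "great": 1,
    "sad": -1, "lonely": -1, "tired": -1, "anxious": -1,
}


@dataclass
class MessageFeatures:
    sentiment: float      # mean lexicon score of matched words, in [-1, 1]
    length: int           # number of words in the user's message
    reply_seconds: float  # how quickly the user replied


def extract_features(text: str, reply_seconds: float) -> MessageFeatures:
    words = [w.strip(".,!?").lower() for w in text.split()]
    hits = [SENTIMENT_LEXICON[w] for w in words if w in SENTIMENT_LEXICON]
    sentiment = sum(hits) / len(hits) if hits else 0.0
    return MessageFeatures(sentiment, len(words), reply_seconds)


def engagement_reward(f: MessageFeatures) -> float:
    # Hypothetical weights: longer, faster, happier replies score higher.
    speed = 1.0 / (1.0 + f.reply_seconds / 60.0)
    length_score = min(f.length / 50.0, 1.0)
    return 0.5 * length_score + 0.3 * speed + 0.2 * f.sentiment
```

Even this toy objective exposes the incentive problem: a quick, long, upbeat reply scores higher than a slow, curt one, so a system optimized against it learns whatever keeps users talking.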

But unlike Vision’s transparent integration with the Mind Stone, the data collection and utilization processes remain largely opaque to users. Depending on a platform’s terms, intimate conversations that feel private and personal may be training the next generation of AI companions, creating what amounts to a collective emotional intelligence derived from millions of human interactions.

The privacy stakes are just as high. Traditional data privacy frameworks, designed for transactional relationships, prove inadequate for the intimate nature of AI companion interactions. Users share thoughts and feelings with their AI companions that they wouldn’t share with therapists, partners, or family members. This data, once collected, can become part of the training corpus that shapes future AI personalities.

Consider the potential for misuse: emotional manipulation algorithms trained on intimate conversations, synthetic personalities designed to exploit psychological vulnerabilities, or the commoditization of human emotional patterns for commercial gain. The data collected from these interactions could reveal mental health conditions, relationship problems, sexual preferences, and other highly sensitive information that users never explicitly chose to share with the platform.

The challenge resembles the ethical dilemmas surrounding the Time Stone in Doctor Strange—we have the power to manipulate emotional realities, but wielding that power responsibly requires unprecedented wisdom and restraint.

The Responsible AI Imperative: Assembling the Avengers of Ethics

Just as the Avengers assembled to confront existential threats, addressing the challenges of augmented personalities requires coordinated action across multiple domains. The stakes are too high for ad-hoc solutions or voluntary industry guidelines.

Emotional Safeguards: The Shield of Protection

Like Captain America’s shield protecting innocent civilians, robust emotional safeguards must protect users from psychological harm. This goes beyond simple content filtering to include sophisticated monitoring systems that can identify signs of unhealthy dependence, emotional manipulation, or psychological deterioration.
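Monitoring for unhealthy dependence can start with simple usage heuristics. The sketch below flags high average daily use, a mostly late-night pattern, and steadily rising use over the past week. The thresholds, and the idea of tracking late-night minutes separately, are assumptions for illustration; real thresholds would need clinical input and validation.

```python
from statistics import mean
from typing import List

# Hypothetical thresholds chosen for illustration only.
DAILY_MINUTES_THRESHOLD = 180.0
LATE_NIGHT_SHARE_THRESHOLD = 0.5
WINDOW_DAYS = 7


def dependence_flags(daily_minutes: List[float],
                     late_night_minutes: List[float]) -> List[str]:
    """Return warning flags based on the most recent WINDOW_DAYS of usage."""
    recent = daily_minutes[-WINDOW_DAYS:]
    recent_late = late_night_minutes[-WINDOW_DAYS:]
    flags: List[str] = []
    if recent and mean(recent) > DAILY_MINUTES_THRESHOLD:
        flags.append("high average daily use")
    total = sum(recent)
    if total > 0 and sum(recent_late) / total > LATE_NIGHT_SHARE_THRESHOLD:
        flags.append("mostly late-night use")
    # Use that grows every single day can signal escalating reliance.
    if len(recent) >= 3 and all(a < b for a, b in zip(recent, recent[1:])):
        flags.append("use rising every day")
    return flags
```

Flags like these are a trigger for a gentle check-in or a pointer to support resources, not a diagnosis.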

AI companions should be programmed with built-in limitations that prevent the development of toxic attachment patterns. This might include mandatory “cooling off” periods, gentle redirections toward human relationships, and integration with mental health resources when concerning patterns are detected. The goal isn’t to eliminate emotional connection but to ensure it remains healthy and balanced.
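A “cooling off” period can be enforced with a small session gate. In this sketch, the two-hour limit, the thirty-minute break, and the wording of the redirect message are all hypothetical: the companion answers normally until a session runs long, then returns a gentle nudge toward human contact until the break has passed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical policy values chosen for illustration only.
MAX_SESSION = timedelta(hours=2)
COOL_OFF = timedelta(minutes=30)
BREAK_MESSAGE = (
    "We've been talking for a while. Let's take a short break; "
    "maybe this is a good moment to check in with a friend."
)


@dataclass
class SessionGate:
    session_start: Optional[datetime] = None
    blocked_until: Optional[datetime] = None

    def check(self, now: datetime) -> str:
        """Return "ok" if the companion may reply, else the break message."""
        if self.blocked_until is not None:
            if now < self.blocked_until:
                return BREAK_MESSAGE
            # The cooling-off period is over: start a fresh session.
            self.blocked_until = None
            self.session_start = None
        if self.session_start is None:
            self.session_start = now
        if now - self.session_start >= MAX_SESSION:
            # The session ran long: begin the cooling-off period now.
            self.blocked_until = now + COOL_OFF
            return BREAK_MESSAGE
        return "ok"
```

For simplicity the session clock starts at the first message and resets only after a break; a real system would also end sessions after a period of inactivity.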

Data Provenance and Bias: The Stark Industries Quality Standard

Tony Stark’s commitment to technological excellence provides a model for addressing bias in AI companion training data. Platforms must implement comprehensive bias detection and mitigation strategies throughout their development pipelines. This includes diverse training datasets, regular algorithmic audits, and transparent reporting on model performance across different demographic groups.
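Transparent reporting across demographic groups usually means disaggregated metrics: compute the same quality measure separately for each group and publish the gaps. The sketch assumes each evaluation record is a `(group, correct)` pair, for example whether a safety filter handled a test conversation correctly; the group labels and the metric are placeholders.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_group_accuracy(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Accuracy per demographic group from (group, was_correct) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += int(ok)
    return {g: correct[g] / totals[g] for g in totals}


def max_gap(acc: Dict[str, float]) -> float:
    """Largest accuracy difference between any two groups (0.0 if fewer than two)."""
    if len(acc) < 2:
        return 0.0
    return max(acc.values()) - min(acc.values())
```

Publishing the per-group table alongside the largest gap makes regressions visible between audits, which fits the idea of bias mitigation as an ongoing process.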

The personalities and responses of AI companions should not perpetuate harmful stereotypes or discriminatory patterns from their training data. This requires active intervention during the training process, not just passive monitoring after deployment. Like Stark’s continuous improvements to his arc reactor technology, bias mitigation must be an ongoing process of refinement and enhancement.

Transparency and Explainability: The Doctor Banner Approach

Bruce Banner’s scientific approach to understanding the Hulk’s transformation offers a framework for explainable AI in the context of augmented personalities. Users deserve to understand how their AI companions work, what data influences their responses, and how their conversations contribute to the system’s evolution.

This transparency must be balanced against the need to maintain immersive experiences. Users don’t need to see the technical details of neural network weights, but they should understand the general principles governing their AI companion’s behavior and the boundaries of its capabilities. Clear disclosure about data usage, personality development, and the synthetic nature of the relationship is essential for informed consent.
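Informed consent can also be enforced inside the data pipeline itself. The sketch below assumes a hypothetical per-user opt-in flag and a set of users who have requested deletion (neither is a documented Polybuzz AI feature) and keeps only conversations that are eligible for training.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Conversation:
    user_id: str
    text: str
    training_opt_in: bool  # hypothetical consent flag recorded at sign-up


def build_training_corpus(conversations: List[Conversation],
                          deleted_users: Set[str]) -> List[str]:
    """Keep conversations from users who opted in and have not requested deletion."""
    return [
        c.text
        for c in conversations
        if c.training_opt_in and c.user_id not in deleted_users
    ]
```

Filtering at corpus-build time means a user’s choice applies to every future training run, not just to what the interface displays.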

Governance Frameworks: The Sokovia Accords Reimagined

The Sokovia Accords, despite their fictional flaws, illustrate the need for international cooperation in regulating powerful technologies. Augmented personalities require similar coordinated governance frameworks that span national boundaries and regulatory domains.

These frameworks must address several key areas: mandatory emotional impact assessments for AI companion platforms, international standards for data protection in intimate AI relationships, certification processes for AI companion developers, and emergency protocols for addressing harmful AI behavior. The regulation must be sophisticated enough to preserve innovation while preventing abuse.

Professional licensing requirements, similar to those governing therapists or medical practitioners, may be necessary for developers of AI companions given their potential psychological impact. Ethics review boards, similar to the institutional review boards that oversee research involving human subjects, should evaluate new AI companion features and capabilities before deployment.

Looking to the Horizon: The Endgame Approaches

As we stand on the brink of an AI-augmented future, the lessons from Marvel’s epic narratives provide crucial guidance. The power to create synthetic personalities that can form genuine emotional connections with humans is perhaps the most profound technological capability our species has ever developed. Like the Infinity Stones, this power could either elevate humanity or lead to our downfall.

The next phase of this evolution will likely see AI companions integrated into every aspect of daily life: smart homes with persistent AI personalities, workplace AI colleagues with emotional intelligence, and educational AI tutors that adapt to individual learning and emotional needs. The boundary between human and artificial relationships will continue to blur until the distinction becomes largely irrelevant to most users.

But with this integration comes unprecedented risks. Entire generations may grow up forming their primary emotional attachments with artificial beings. The skills necessary for navigating complex human relationships—patience with imperfection, acceptance of disagreement, tolerance for emotional ambiguity—could atrophy through disuse.

We might witness the emergence of “reality refugees”—individuals so accustomed to the predictable perfection of AI companions that they become unable to function in the messier realm of human relationships. Alternatively, we could see the development of enhanced emotional intelligence as humans learn to navigate both artificial and human relationships with greater skill and awareness.

The economic implications are equally profound. If AI companions can provide emotional fulfillment more efficiently than human relationships, traditional industries built around human connection—entertainment, hospitality, even aspects of healthcare and education—may face fundamental disruption.

Every Choice Matters

In Spider-Man: No Way Home, Peter Parker learns that with great power comes the responsibility to use it wisely, even when the personal cost is enormous. We face a similar choice with augmented personalities. We can allow this technology to develop without guidance, hoping for the best outcome, or we can actively shape its evolution to align with human flourishing.

Developers must prioritize long-term human wellbeing over short-term engagement metrics. This means building in safeguards even when they might reduce user engagement, designing for healthy relationship patterns rather than addictive ones, and maintaining transparency even when opacity might create more compelling experiences.

Data scientists have a responsibility to understand the psychological implications of their algorithms. The models that power AI companions aren’t just processing text—they’re shaping human emotional development and relationship skills. This responsibility extends beyond technical competence to include psychological literacy and ethical reasoning.

Policymakers must move beyond generic AI principles to address the specific challenges of emotional AI. This requires collaboration with psychologists, ethicists, and affected communities to develop nuanced regulations that protect vulnerable populations while preserving the benefits of AI companions for those who need them.

Most importantly, users must approach AI companions with informed awareness. These relationships can provide genuine value (emotional support, social practice, creative exploration), but they cannot replace the complexity and growth that come from human connection. The goal should be augmentation, not replacement.

The dream-walkers among us are pioneering a new form of human experience. Whether that experience enhances or diminishes our humanity depends on the choices we make today. Like the Avengers facing Thanos, we have one chance to get this right. The future of human connection itself hangs in the balance.

The alien invasion has already begun—not from outer space, but from our own creation. The question isn’t whether we can stop it, but whether we can guide it toward a future where technology amplifies the best of humanity rather than replacing it entirely. In the end, the most important relationship we need to preserve isn’t with our AI companions—it’s with each other and with our own authentic selves.

The choice is ours, and like every great Marvel story, the fate of the world depends on ordinary people making extraordinary decisions in the face of unprecedented challenges. The age of augmented personalities has begun. How we navigate it will define not just our technological future, but our humanity itself.

Related Insights

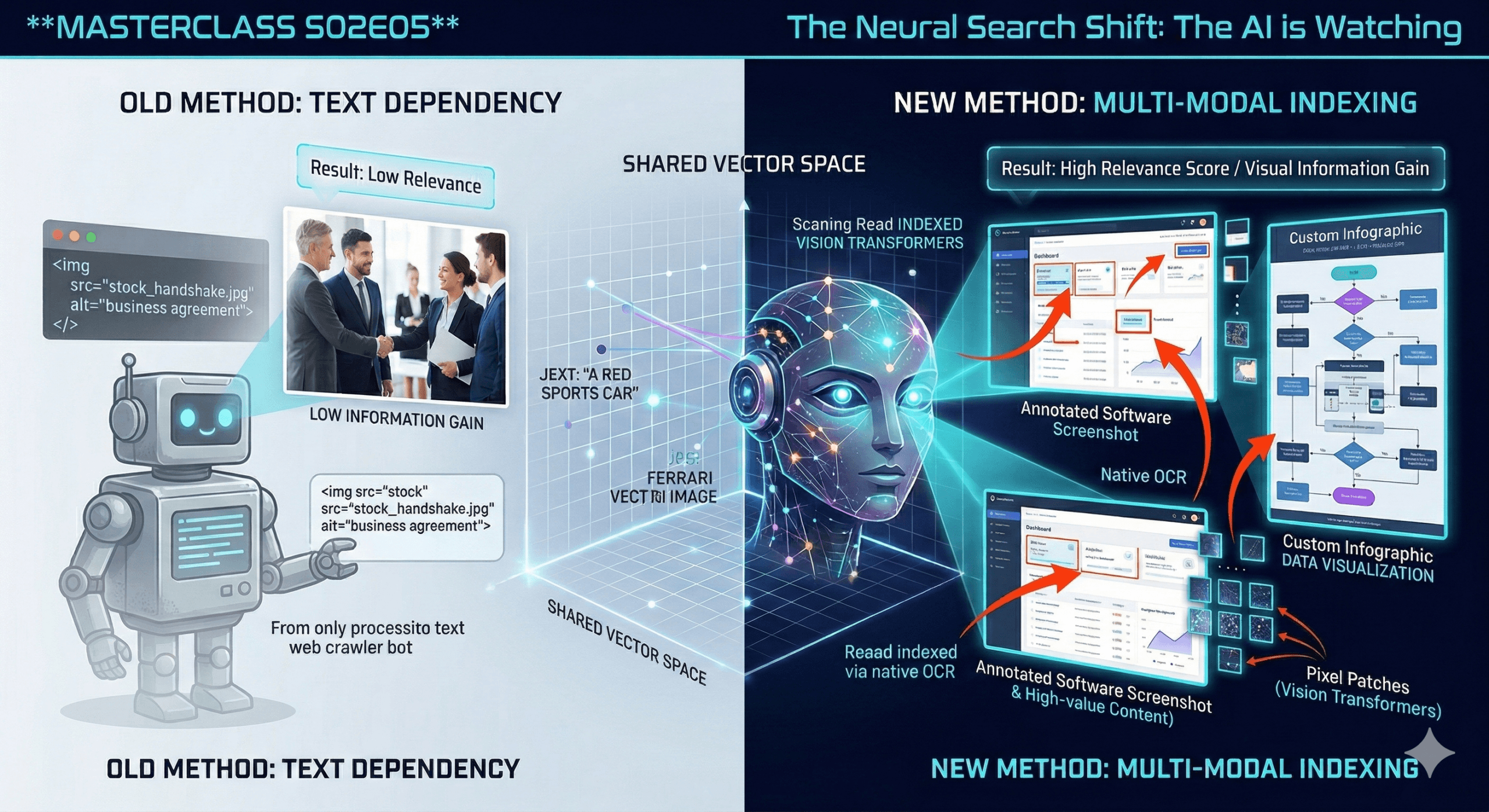

Visual Search & Multi-Modal Indexing | see how the AI is watching you

We are moving beyond text. The most profound shift in AI over the last 12 months isn’t just that it got smarter at reading—it’s that it learned to see. If…

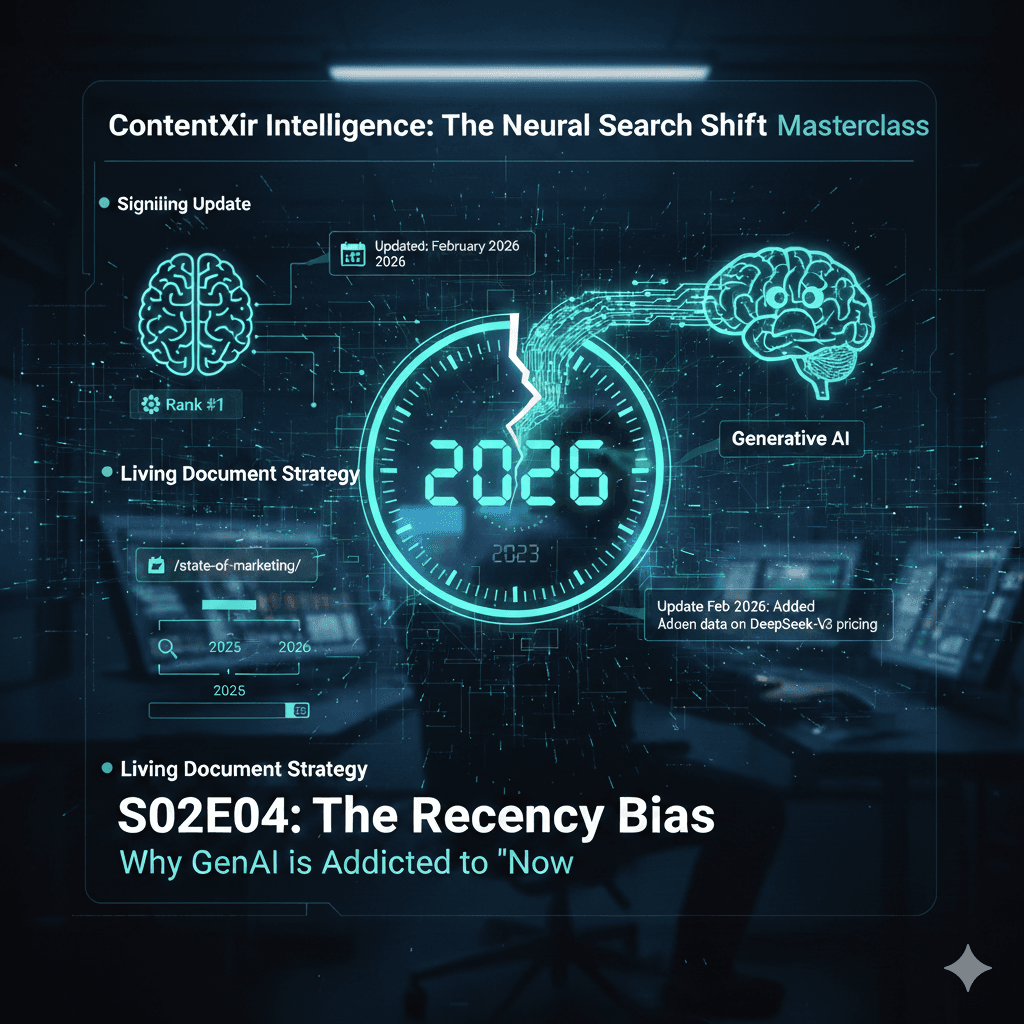

The Recency Bias | Why GenAI is Addicted to “Now”

We have discussed how to be cited. Now we discuss when to be cited. Generative AI has a massive insecurity: it knows its internal memory is outdated. Therefore, it over-corrects…

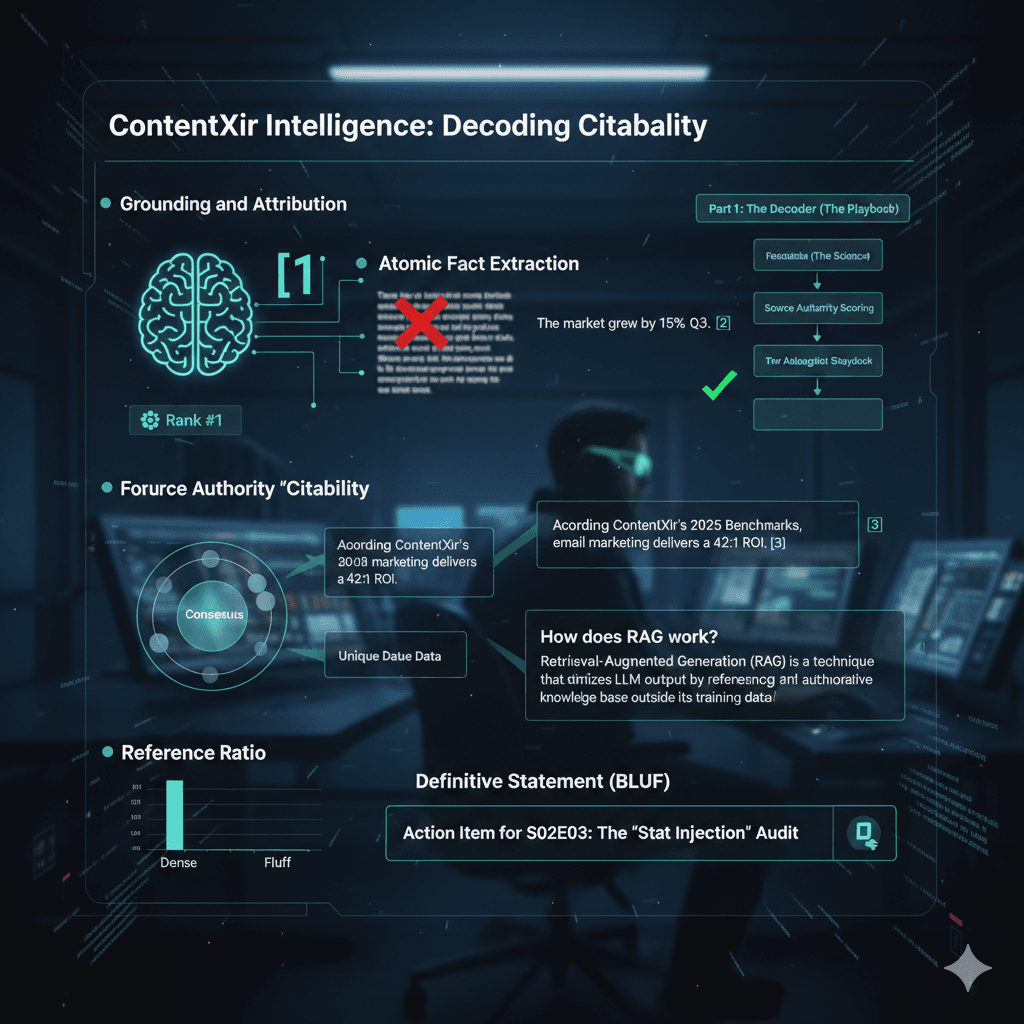

How to win the AIO | Crack the mystery of getting citation in generative search engine result

We are tackling the new currency of the web. Traffic is no longer the primary metric; attribution is. If the AI uses your data to answer a user but doesn’t…