The Infinity Saga of Generative AI: Encoders, Decoders, and the Stones of Language

The world of Generative AI is like the Marvel Cinematic Universe (MCU): vast, interconnected, and powered by incredible forces. At the heart of many of these cosmic-level operations—from translating alien languages to predicting future timelines—are the fundamental components known as Encoders and Decoders. Think of them as the two sides of a crucial mission, working in tandem to process, understand, and then generate entirely new realities (in our case, text, images, or code).

| AI Component | MCU Parallel | Core Function |
| --- | --- | --- |
| Encoder | The Hulk (Smart Hulk) | Takes raw, complex input and distills its essence, its “context,” into a powerful, dense form. |
| Decoder | Doctor Strange | Takes that distilled essence and uses it to weave a new output, step-by-step, into the desired final form. |
| Context Vector/Latent Space | The Infinity Gauntlet/Stones | The single, concentrated power source that bridges the two halves of the operation. |

1. How Encoders and Decoders Actually Work: The Bifrost Bridge 🌉

The Encoder-Decoder architecture is the core blueprint for sequence-to-sequence tasks, meaning an input sequence (like an English sentence) is mapped to an output sequence (like a French sentence).

The Encoder: The Hulk’s Transformation

The Encoder’s job is to take the raw, messy input—a sentence, a paragraph, or even code—and process it until it captures the complete context or meaning.

- Input Sequence (Raw Data): Imagine a cryptic message from an alien race—the raw input data.

- Tokenization: The message is broken down into smaller, manageable units (words or sub-words).

- Embedding: Each token is then converted into a numerical vector—its “word embedding.” Think of this as giving each word its own unique, multi-dimensional suit of armor. Words with similar meanings have armor that looks numerically similar.

- The Contextual Crunch: This is the most crucial part, often powered by a technology called the Transformer (like a high-tech Stark-designed processor). The Encoder passes these embeddings through its layers, using a technique called Self-Attention. Self-Attention allows the model to look at every other word in the input sequence to understand the context of the current word. For example, if the input is “The Hulk smashed the car,” Self-Attention ensures that the word “smashed” is encoded with the knowledge that the Hulk was the one doing the smashing.

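- The Context Vector (The Infinity Gauntlet): After all the layers have processed the sequence and fused the context, the Encoder produces a dense numerical representation of the input. In the original recurrent (RNN) encoder-decoder models this was a single, fixed-size context vector; a Transformer encoder instead outputs one context-aware vector per input token. Either way, this representation is the compact, powerful essence of the entire input that the Decoder draws on.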
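To make the Contextual Crunch a little more concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention. It is an illustration under assumptions, not a full Transformer layer: the embeddings and the projection matrices `w_q`, `w_k`, `w_v` are random stand-ins for values a real model would learn, and the function name `self_attention` is made up for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max before exponentiating for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(embeddings, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sequence."""
    queries = embeddings @ w_q
    keys = embeddings @ w_k
    values = embeddings @ w_v
    d_k = keys.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)  # (seq_len, seq_len) similarity scores
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ values, weights

rng = np.random.default_rng(0)
tokens = ["The", "Hulk", "smashed", "the", "car"]
d_model = 8  # assumed embedding size, chosen only for the demo

# Random stand-ins for learned embeddings and projection matrices.
embeddings = rng.normal(size=(len(tokens), d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

contextual, weights = self_attention(embeddings, w_q, w_k, w_v)
print(contextual.shape)                                  # one vector per token
print(np.round(weights[tokens.index("smashed")], 2))     # how "smashed" attends to each token
```

Each row of `weights` says how much one token draws on every token in the sentence, which is the mechanism that lets “smashed” pick up information about “Hulk.” With random matrices the numbers themselves are meaningless; only the shapes and the flow of data are the point here.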

The Decoder: Doctor Strange Weaving Spells

The Decoder takes the powerful Context Vector from the Encoder and uses it to generate the desired output sequence, one unit (or “token”) at a time. This process is autoregressive, meaning each new token generated depends on the tokens that came before it in the output sequence.

- Receiving the Gauntlet: The Decoder receives the Context Vector (the essence of the input). This is its starting point.

- Step-by-Step Generation: It begins its work, usually starting with a special “Start of Sequence” token.

- Attention’s Focus (The Eye of Agamotto): The Decoder also uses masked Self-Attention (to understand the context of the words it has already generated, without peeking at future ones) and an Encoder-Decoder Attention mechanism, also called cross-attention. This second mechanism is like Doctor Strange gazing into the Encoder’s output, allowing the Decoder to focus its attention on the most relevant parts of the original input message as it generates the next word.

- Predicting the Next Word: Based on its own generated sequence and the relevant context from the input, the Decoder produces a probability distribution over the vocabulary for the next token. It selects the token with the highest probability (greedy decoding) or uses a more nuanced method such as sampling or beam search, and adds it to the output sequence.

- The Final Sequence: This loop repeats until the Decoder generates a special “End of Sequence” token, completing the mission and delivering the perfectly translated or summarized result.
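The generation loop above can be sketched in a few lines. This is a toy under stated assumptions: `next_token_logits` and `TOY_TRANSITIONS` are hypothetical stand-ins for a trained decoder (a real one would attend to the encoder output rather than follow a lookup table), and the tiny vocabulary exists only to make the loop runnable.

```python
import numpy as np

VOCAB = ["<sos>", "<eos>", "Hulk", "casse", "la", "voiture"]
TOKEN_ID = {tok: i for i, tok in enumerate(VOCAB)}

# Hypothetical stand-in for a trained decoder: the token it scores highest
# after each previous token. A real decoder learns this from data.
TOY_TRANSITIONS = {
    "<sos>": "Hulk", "Hulk": "casse", "casse": "la",
    "la": "voiture", "voiture": "<eos>",
}

def next_token_logits(encoder_output, generated_ids):
    """Return one score per vocabulary entry (toy version, ignores encoder_output)."""
    logits = np.zeros(len(VOCAB))
    previous = VOCAB[generated_ids[-1]]
    logits[TOKEN_ID[TOY_TRANSITIONS[previous]]] = 5.0
    return logits

def greedy_decode(encoder_output, max_len=20):
    generated = [TOKEN_ID["<sos>"]]              # start-of-sequence token
    for _ in range(max_len):
        logits = next_token_logits(encoder_output, generated)
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()                     # softmax over the vocabulary
        next_id = int(np.argmax(probs))          # greedy: take the most likely token
        generated.append(next_id)
        if VOCAB[next_id] == "<eos>":            # stop at end-of-sequence
            break
    return [VOCAB[i] for i in generated[1:] if VOCAB[i] != "<eos>"]

print(greedy_decode(encoder_output=None))  # ['Hulk', 'casse', 'la', 'voiture']
```

Swapping `np.argmax` for a random draw from `probs` would turn this into sampling; the `max_len` cap is a common safeguard in case the end-of-sequence token never appears.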

2. Implementing Byte Pair Encoding (BPE): The Tokenization Tesseract Cube 🧊

Before an Encoder can even begin its work, it needs its raw text broken down into tokens. This process is called Tokenization. In large-scale models, a highly effective and clever technique is used: Byte Pair Encoding (BPE).

BPE is like a smart data compression algorithm turned into a language splitter. It finds the perfect balance between splitting text into individual characters (too many tokens) and splitting into whole words (too many unique words).

The BPE Protocol: The Training Montage

Imagine BPE is tasked with tokenizing the phrase: “Wakanda forever Wakanda.”

- Initial Vocabulary (Characters): Start with every unique character and a special end-of-word marker.

- Iterative Merging (The Power Stone Fusion): This is the core BPE mechanism.

- Find Most Frequent Pair: The algorithm scans the entire corpus and identifies the most frequently occurring adjacent pair of characters or sub-words (e.g., `a` and `n` might appear together most often).

- Merge: It treats that pair as a single, new token (e.g., merging `a` and `n` into `an`). All instances of the original pair in the text are replaced with this new, combined token.

- Repeat: This process of finding and merging the most frequent pair repeats until a desired vocabulary size is reached. Over time, smaller tokens like `W`, `a`, `k` will merge into `Wak`, and eventually into the full word `Wakanda`.

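Final Tokenization: For a new, unseen phrase, say “Wakanda foreverland,” the trained BPE starts from individual characters and replays its learned merges in the order they were learned. If sub-words such as “forever” and “land” were learned during training, the result might be [Wakanda, forever, land]. Because an unseen word can always fall back to smaller known pieces (down to single characters or bytes, as long as those are in the base vocabulary), BPE largely avoids the dreaded “Unknown Token” problem that can derail any AI mission.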
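As a rough illustration of the training montage, here is a small pure-Python sketch of the merge loop. The function names (`get_pair_counts`, `merge_pair`, `train_bpe`) and the `</w>` end-of-word marker are choices made for this example, and ties between equally frequent pairs are broken by whichever pair was seen first, which is an arbitrary assumption rather than part of BPE itself.

```python
from collections import Counter

def get_pair_counts(word_freqs):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in word_freqs.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs

def merge_pair(pair, word_freqs):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in word_freqs.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(text, num_merges):
    # Each word starts as a tuple of characters plus an end-of-word marker.
    word_freqs = Counter(tuple(word) + ("</w>",) for word in text.split())
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(word_freqs)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; first seen wins ties
        word_freqs = merge_pair(best, word_freqs)
        merges.append(best)
    return merges, word_freqs

merges, vocab = train_bpe("Wakanda forever Wakanda", num_merges=7)
print(merges[0])    # ('W', 'a')
print(list(vocab))  # "Wakanda" is now a single symbol; "forever" is still characters
```

On this tiny corpus every pair inside “Wakanda” occurs twice and every pair inside “forever” once, so seven merges are enough to fuse “Wakanda” into one token while “forever” stays split. A real tokenizer would train on a far larger corpus and stop at a target vocabulary size.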

3. A Simple ML Pipeline: The Assembled Avengers (NumPy + Pandas) 💪

To prove that true power comes from within, we can build a basic Machine Learning pipeline—a simple Linear Regression model—using only the fundamental building blocks of Python’s numerical universe: Pandas (for data handling) and NumPy (for mathematical operations). This is the “Assembled Avengers” approach—no fancy scikit-learn or TensorFlow armors required.

The Mission: Predict Tony Stark’s Next Invention Cost

Phase 1: Data Acquisition and Preprocessing (Pandas/NumPy as SHIELD Agents)

We use Pandas to read and manipulate our data (e.g., a CSV file of previous inventions). We’d use NumPy for any necessary feature scaling or transformation, turning the clean data frame into a pure matrix of numbers.

- Goal: Convert all descriptive data (like “Suit Type”) into numerical form and separate the Features (X) (the variables we use to predict) from the Target (y) (what we want to predict).
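A minimal sketch of Phase 1 might look like the following. The column names (`suit_type`, `build_hours`, `cost_musd`) and the four rows are invented for illustration; in practice the frame would come from something like `pd.read_csv` on your own file.

```python
import numpy as np
import pandas as pd

# Hypothetical invention records standing in for a real CSV file.
inventions = pd.DataFrame({
    "suit_type": ["combat", "stealth", "combat", "space"],
    "build_hours": [120.0, 200.0, 150.0, 320.0],
    "cost_musd": [10.0, 18.0, 12.5, 30.0],
})

# One-hot encode the descriptive column so every feature is numeric.
features = pd.get_dummies(
    inventions.drop(columns="cost_musd"), columns=["suit_type"], dtype=float
)

# Standardize the numeric feature: zero mean, unit variance.
hours = features["build_hours"]
features["build_hours"] = (hours - hours.mean()) / hours.std(ddof=0)

X = features.to_numpy()                 # Features, shape (n_samples, n_features)
y = inventions["cost_musd"].to_numpy()  # Target, shape (n_samples,)

print(features.columns.tolist())
print(X.shape, y.shape)
```

Scaling matters later: gradient descent tends to converge much faster when features live on similar scales.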

Phase 2: The Core Algorithm – Training (NumPy’s Strength)

Our model is Linear Regression, predicting a value based on a line. The model needs to find the best Weight (w) and Bias (b) values to draw that line.

- Initialization: Use NumPy to create an initial, random Weight (w) and a Bias (b). These are the model’s first, wild guesses.

- Prediction (Forward Pass): Calculate the predicted value (ŷ), which for Linear Regression is ŷ = Xw + b.

- In English: NumPy takes your input features and multiplies them by the current Weights and adds the Bias. This is just a massive, simultaneous calculation across all data points to get a prediction.

- Loss Calculation (The Damage Report): Measure the error between the prediction (ŷ) and the actual value (y).

- In English: We calculate the average squared distance between what the model predicted and what the actual answer was. This gives us a single number, the Loss, which tells us exactly how wrong the model is.

- Weight Update (Gradient Descent/The Healing Factor): This is where we tell the model how to adjust its guesses to be more accurate.

- The Gradient: Using NumPy, we calculate the Gradient of the Loss with respect to the Weights and Bias. Think of the Gradient as a “directional arrow” that points straight up the hill of error, in the direction where the Loss grows fastest. Its size tells us how strongly each Weight and the Bias influence the Loss.

- The Update: We then adjust the Weights and Bias in the opposite direction of the Gradient. We only move a small amount at a time (controlled by the Learning Rate) to avoid overshooting the target.

- Iteration: This entire process—Predict, Calculate Loss, Update Weights—repeats for hundreds or thousands of epochs until the Loss is minimized and the model’s predictions are accurate. NumPy is the engine that executes these rapid-fire numerical adjustments.
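Put together, the training loop might be sketched like this. It assumes Mean Squared Error as the Loss and plain batch gradient descent; the function name `train_linear_regression`, the default learning rate, and the synthetic data are choices made for this example. The Bias starts at zero here rather than at a random value, which is a common simplification.

```python
import numpy as np

def train_linear_regression(X, y, learning_rate=0.1, epochs=2000, seed=0):
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    w = rng.normal(scale=0.01, size=n_features)  # small random initial Weights
    b = 0.0                                      # Bias starts at zero (assumption)
    for _ in range(epochs):
        y_hat = X @ w + b                        # forward pass: predictions
        error = y_hat - y
        loss = np.mean(error ** 2)               # Mean Squared Error
        grad_w = (2.0 / n_samples) * (X.T @ error)  # dLoss/dw
        grad_b = 2.0 * np.mean(error)               # dLoss/db
        w -= learning_rate * grad_w              # step against the Gradient
        b -= learning_rate * grad_b
    return w, b, loss

# Synthetic, noise-free data with known Weights [3, -2] and Bias 5.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 2))
y = X @ np.array([3.0, -2.0]) + 5.0

w, b, loss = train_linear_regression(X, y)
print(np.round(w, 3), round(float(b), 3), loss)
```

Because the data is generated from a known line, the learned values should land very close to the true Weights and Bias, which makes this a handy sanity check before pointing the loop at real data.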

Phase 3: Evaluation (The After-Action Review)

Once the training is complete, the final Weights and Bias are used to make predictions on unseen “test” data. We use basic NumPy operations to calculate metrics like the final Root Mean Squared Error (RMSE) to judge the model’s success.
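For the After-Action Review, RMSE takes only a line or two of NumPy. The Weights, Bias, and test values below are hypothetical numbers picked to keep the arithmetic easy to follow.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root Mean Squared Error between actual and predicted values."""
    diff = np.asarray(y_true) - np.asarray(y_pred)
    return float(np.sqrt(np.mean(diff ** 2)))

# Hypothetical trained parameters and held-out test data.
w = np.array([3.0, -2.0])
b = 5.0
X_test = np.array([[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]])
y_test = np.array([8.5, 3.0, 6.0])

y_pred = X_test @ w + b          # predictions: [8.0, 3.0, 7.0]
print(round(rmse(y_test, y_pred), 4))  # 0.6455
```

RMSE is in the same units as the Target, so a value of about 0.65 here reads as “off by roughly 0.65 on a typical prediction.”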

By using only Pandas and NumPy, we have successfully run a full ML mission: Data Prep → Training → Prediction → Evaluation. This is the fundamental, stripped-down power of all AI, revealing the elegant math underneath the cosmic-level complexity.

Conclusion: The Assembled Power of Generative AI

The journey through Generative AI is a Saga built on interconnected brilliance, much like the MCU.

The Encoder acts as the Smart Hulk, processing the raw chaos of input data and distilling its meaning into the dense, powerful Context Vector—our own Infinity Gauntlet of pure information. The Encoder’s use of Self-Attention ensures that every piece of data is understood in relation to the whole, establishing a deep, holistic context.

This power is then handed to the Decoder, the Doctor Strange of the operation, which uses its Attention mechanisms to sequentially and intelligently weave the desired output, word by word, spell by spell. It connects the context of the new creation with the wisdom of the original input.

Before any of this high-level mission can begin, raw text must be prepared by Byte Pair Encoding (BPE)—the Tesseract Cube of tokenization. BPE smartly constructs a versatile vocabulary by merging the most frequent character pairs, ensuring that our AI models can handle the vast, ever-expanding lexicon of human language without crashing into an “Unknown Token” error.

Finally, we proved that the foundational strength of the entire operation lies in the basic, yet mighty, tools: NumPy and Pandas. These are the Assembled Avengers that, without needing complex pre-built armors, can execute a full ML pipeline—from data preparation to the intricate, iterative trial-and-error of training.

Generative AI, in its simplest form, is a two-part mission: Understand (Encode) and Create (Decode). By mastering these foundational concepts and the simple yet powerful tools that underpin them, we move closer to wielding the true, transformative power of this new technological era. The AI Age is not a mystical force, but a masterful application of elegant computation.

Related Insights

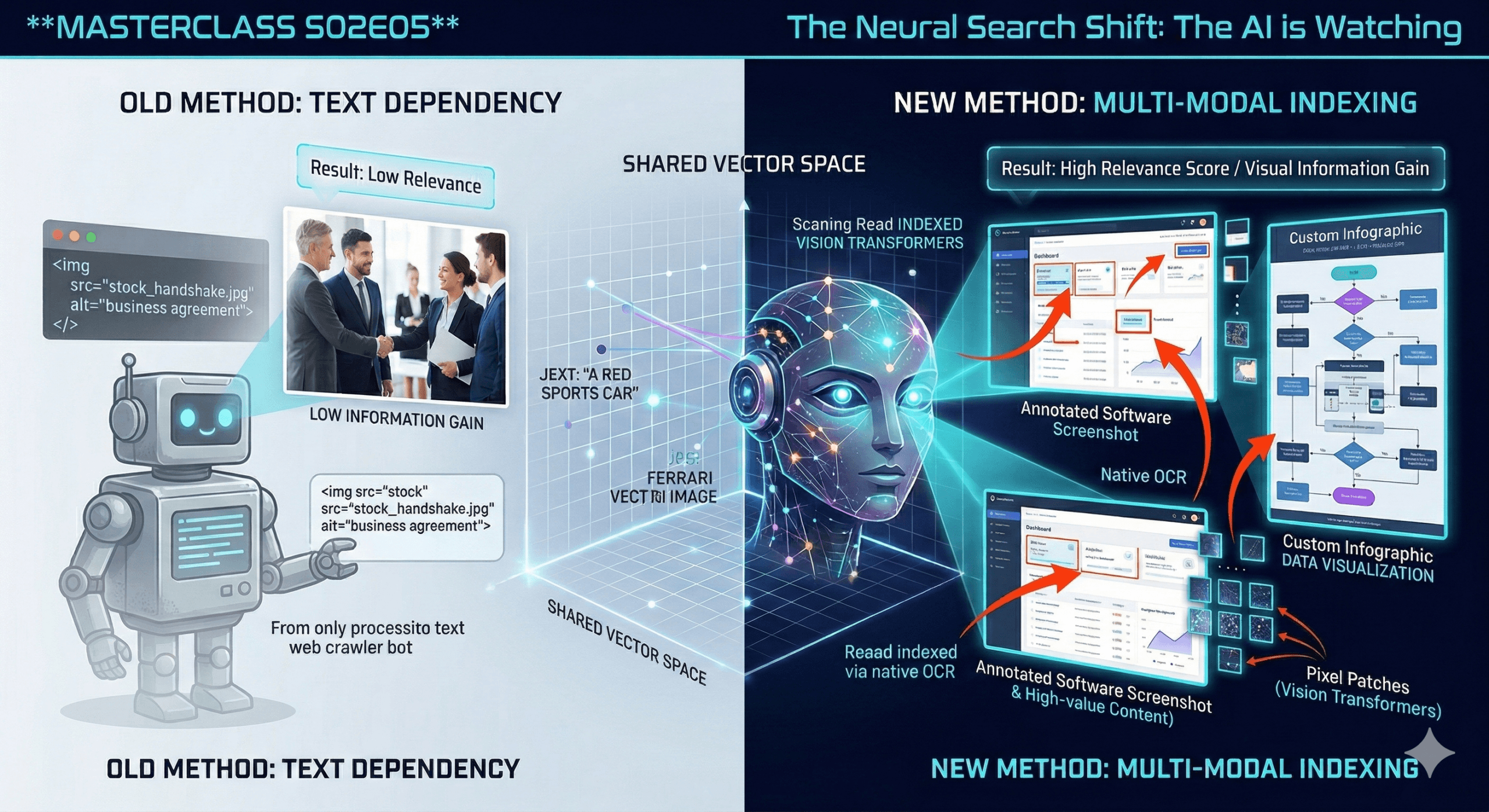

Visual Search & Multi-Modal Indexing | see how the AI is watching you

We are moving beyond text. The most profound shift in AI over the last 12 months isn’t just that it got smarter at reading—it’s that it learned to see. If…

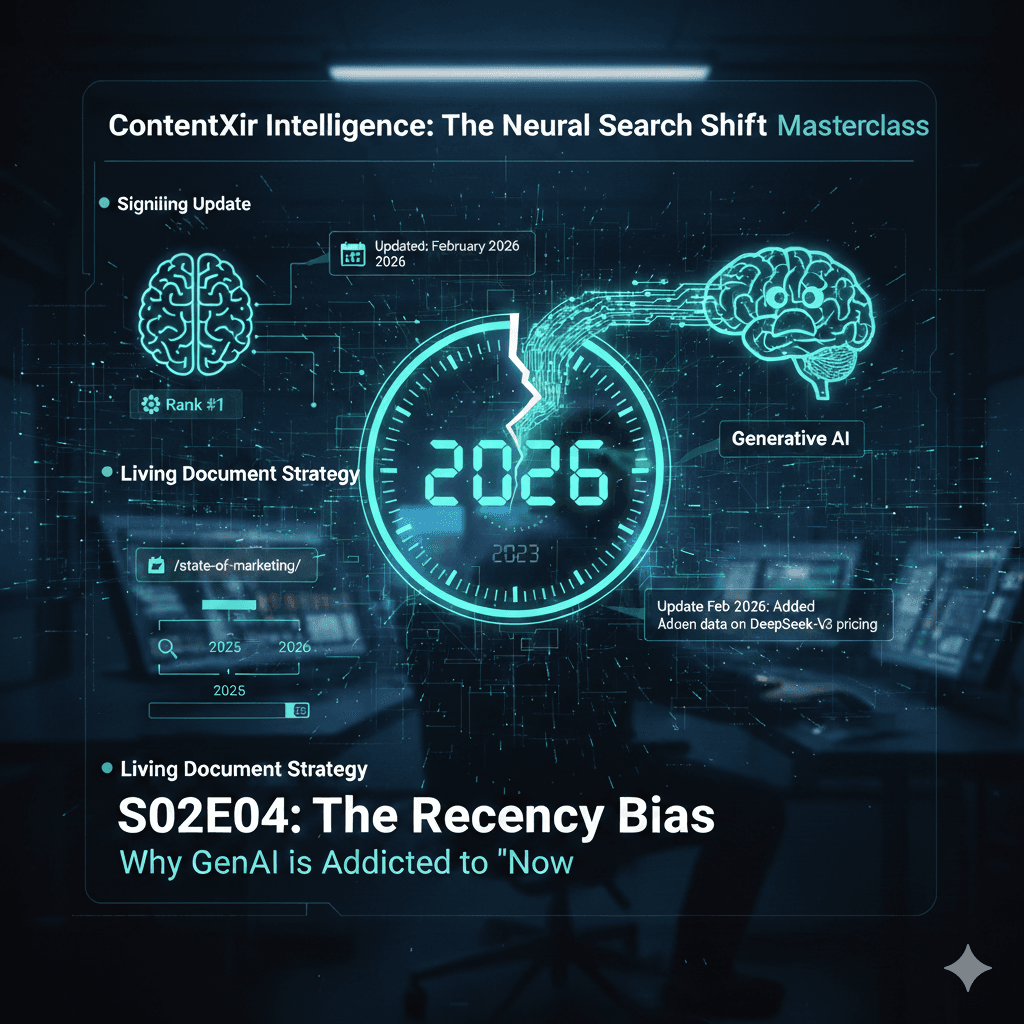

The Recency Bias | Why GenAI is Addicted to “Now”

We have discussed how to be cited. Now we discuss when to be cited. Generative AI has a massive insecurity: it knows its internal memory is outdated. Therefore, it over-corrects…

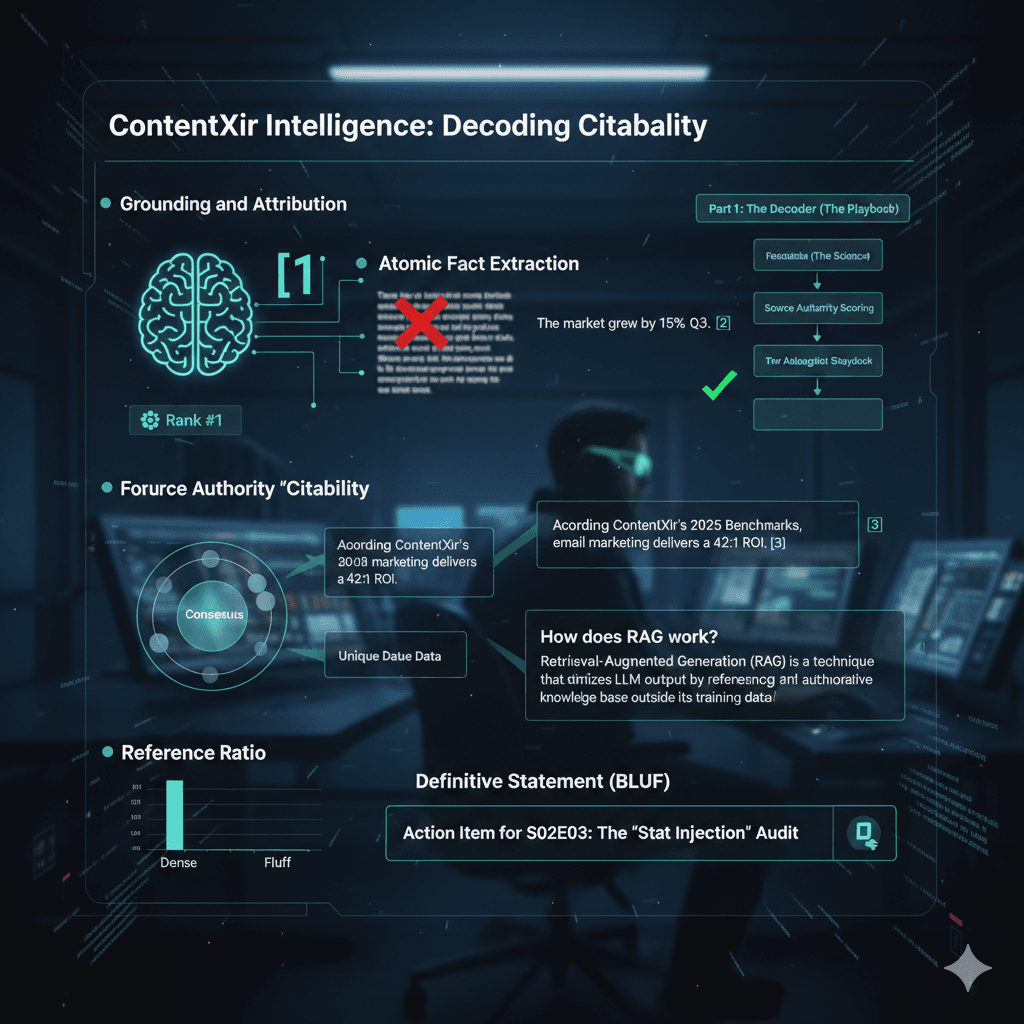

How to win the AIO | Crack the mystery of getting citation in generative search engine result

We are tackling the new currency of the web. Traffic is no longer the primary metric; attribution is. If the AI uses your data to answer a user but doesn’t…