The Neural Search Shift: From Keywords to Context

The Digital Landscape Hasn’t Just Shifted. It Has Evolved.

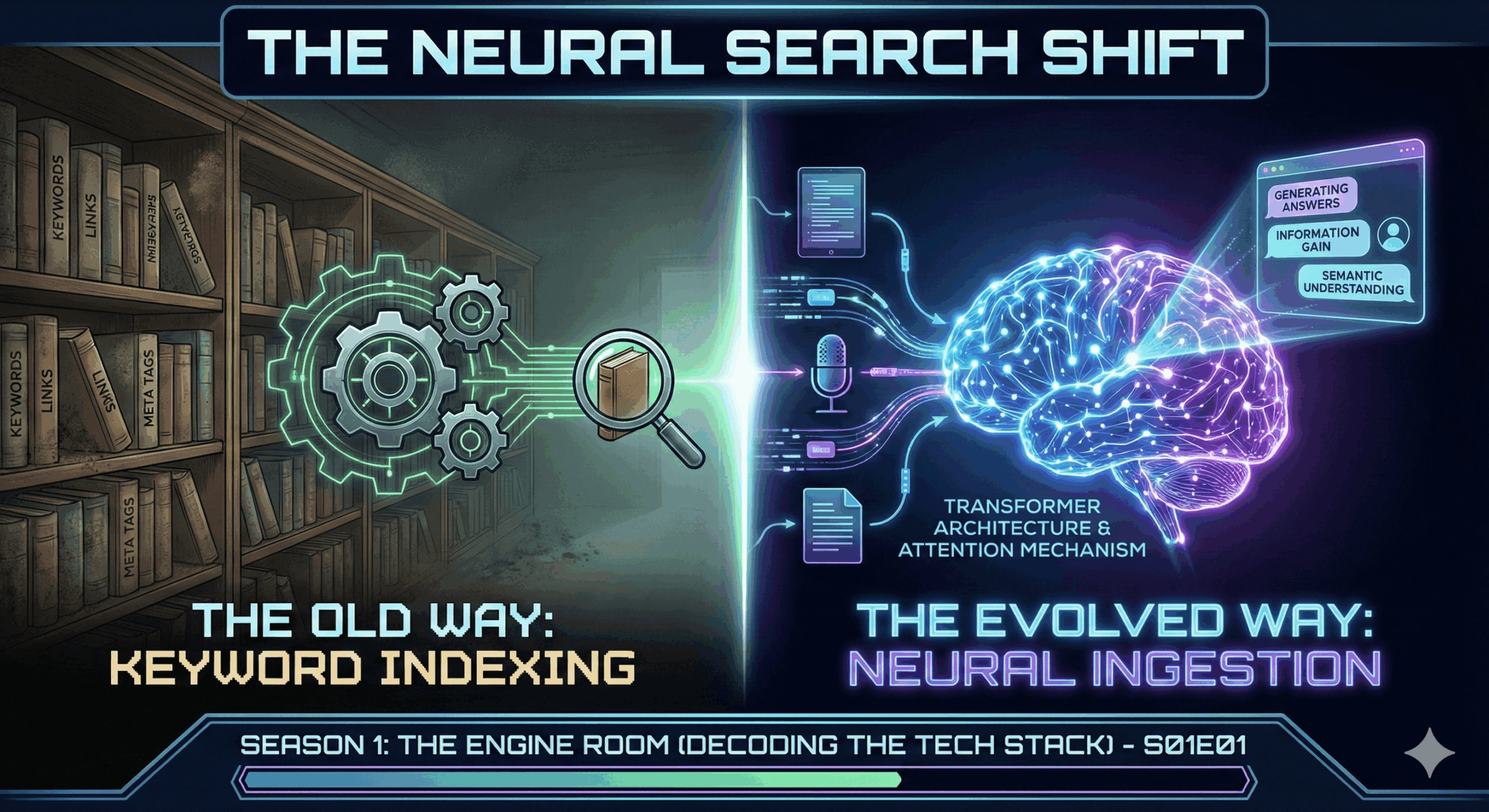

For the last 20 years, we played a game of “matchmaker” with search engines. We matched keywords to queries, backlinks to authority, and meta tags to crawlers. We mastered the rules of the Index.

But the game has changed. We have moved from the age of Indexing to the age of Ingestion.

Search engines are no longer just retrieving links; they are generating answers. They are “reading” your content with the same neural architecture that powers ChatGPT, Claude, and Gemini. They don’t care about your keyword density—they care about your Information Gain.

Welcome to “The Neural Search Shift.”

Over the next 90 days, we are dismantling the “Black Box” of Generative AI. This isn’t just a blog series; it is a Masterclass in Generative Engine Optimization (GEO).

We are breaking this curriculum into 6 Seasons:

- Season 1: The Engine Room (Decoding the Tech Stack)

- Season 2: The Search Mechanism (Indexing vs. RAG)

- Season 3: The Platform Wars (Google vs. GPT vs. Claude)

- Season 4: GEO Protocols (The New Playbook)

- Season 5: The ContentXir Advantage (Data-Driven Strategy)

- Season 6: Future-Proofing (Agents & Multi-Modal)

Every day, we will release a new Episode. We will decode the hard science of Large Language Models (LLMs)—from Vectors to Attention Heads—and immediately translate that complexity into an actionable content strategy you can use today.

Stop guessing how the algorithm works. It’s time to learn how it thinks.

Buckle up. Season 1 starts now.

S01E01: How Transformers Killed the Keyword

For two decades, search engines were librarians, filing books by their labels (keywords). Today, they are professors: reading the books, understanding the plot, and grading your logic. In this premiere episode, we decode the “T” in GPT (Transformer) and explain why the era of “writing for spiders” is officially over.

Part 1: The Decoder (The Science)

How LLMs Actually “Read”

To master Generative Engine Optimization (GEO), you must first understand the brain that powers it. The shift from “finding links” to “generating answers” wasn’t magic; it was a specific breakthrough in computer science called the Transformer architecture.

The Old Way: The “Forgetful” Reader

Before 2017, AI models (like RNNs) read text sequentially: left to right, one word at a time.

- The Flaw: By the time they reached the end of a long paragraph, they often “forgot” the beginning. They struggled to connect a subject introduced in sentence #1 to a word that refers back to it in sentence #4.

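- The SEO Legacy: Search engines of that era leaned on keyword matching and term frequency rather than deep reading, which is why old-school SEO demanded high keyword density. You had to keep repeating your target phrase to remind the “forgetful” bot what the page was about.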
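To make the “forgetful reader” concrete, here is a deliberately simplified sketch: a scalar linear recurrence h_t = w·h_(t-1) + x_t standing in for an RNN’s hidden state. This toy model is an assumption for illustration (real RNNs use vectors, nonlinearities, and often gates), and the function name `first_word_weight` and the decay factor `w = 0.8` are hypothetical choices. It shows the core weakness: the first word’s influence shrinks by a factor of w at every step.

```python
def first_word_weight(sequence_length: int, w: float = 0.8) -> float:
    """How much of the first word survives in the final hidden state.

    Toy model (an assumption for illustration): a scalar linear recurrence
    h_t = w * h_{t-1} + x_t, where only the first word carries signal (x = 1).
    With |w| < 1, the first word's contribution shrinks to w ** (length - 1).
    """
    h = 0.0
    for t in range(sequence_length):
        x = 1.0 if t == 0 else 0.0  # signal only at the first position
        h = w * h + x               # read one word at a time, left to right
    return h


for length in (1, 5, 20, 50):
    print(f"sequence length {length:>2}: first-word weight = {first_word_weight(length):.6f}")
```

With w = 0.8, the first word’s weight is 1.0 in a one-word sequence and 0.8⁴ = 0.4096 after five words, then keeps shrinking geometrically. Gated variants such as LSTMs soften this decay, but long-range signals still have to pass through every intermediate step, which is the bottleneck attention removes.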

The New Way: Parallel Reality

Google’s 2017 breakthrough paper, “Attention Is All You Need,” introduced the Transformer. This model does not read left-to-right. It ingests your entire content block simultaneously.

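It breaks your text into tokens (words and word fragments) and processes them in parallel, so any token can be linked directly to any other, however far apart they sit. (Generative models like GPT still write their answers one token at a time, and each new token looks back at everything before it.) But its superpower is a mechanism called Self-Attention.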

The “Self-Attention” Mechanism

Imagine the sentence:

“The animal didn’t cross the street because it was too tired.”

A keyword-based engine sees isolated strings: “animal,” “street,” “tired.” A Transformer asks: What does “it” refer to?

Through Self-Attention, the model calculates the mathematical relationship (or weight) between words. In this sentence, it assigns a high connection score between “it” and “animal.” If you change the sentence to “The animal didn’t cross the street because it was too wide,” the model instantly shifts the weight, connecting “it” to “street.”
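The weighting described above comes from scaled dot-product attention, introduced in “Attention Is All You Need.” The sketch below computes only the attention weights, softmax(q·k / √d), for a single query token, restricted to the two candidate nouns for readability; it omits the value vectors that a full attention layer would then average. All vectors are hypothetical, hand-written numbers (a real model learns its query and key projections), and the two query vectors for “it” are made up to mimic how the surrounding words (“tired” vs. “wide”) change what “it” looks for.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d)) over the keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


# Hypothetical hand-written key vectors.
# Dimensions (labels for readability only): [living thing, place, can be tired, can be wide]
candidates = ["animal", "street"]
keys = [
    [1.0, 0.0, 1.0, 0.0],  # animal
    [0.0, 1.0, 0.0, 1.0],  # street
]

# Hypothetical query vectors for "it", one per sentence ending.
queries = {
    "...because it was too tired": [0.5, 0.0, 2.0, 0.0],
    "...because it was too wide": [0.0, 0.5, 0.0, 2.0],
}

for sentence, query in queries.items():
    weights = attention_weights(query, keys)
    summary = ", ".join(f"{word}={w:.2f}" for word, w in zip(candidates, weights))
    print(f"{sentence}: {summary}")
```

With these made-up numbers, “it” puts about 0.78 of its attention on “animal” in the “tired” sentence and about 0.78 on “street” in the “wide” sentence. In a real Transformer, many attention heads run this computation in parallel, each with its own learned projections.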

The Reality Check: The engine is no longer matching keywords. It is calculating the relationships between your concepts.

Part 2: The Strategist (The Playbook)

Stop Writing for Spiders

If the search engine is now a Transformer that reads for context, your content strategy must pivot immediately. The tactics that worked for the “Librarian” will earn you a failing grade from the “Professor.”

1. Kill “Keyword Density” → Adopt “Semantic Variance”

Since Transformers process the “whole thought” at once, repeating a keyword (e.g., “Best CRM”) 20 times is now a liability.

- Why: To a Transformer, excessive repetition reads as redundant, low-information text (low entropy). It dilutes your message.

- The Strategy: Use Semantic Variance. Mention your core keyword once, then immediately pivot to related concepts.

- Instead of: Repeating “Best CRM” 5x.

- Do This: Discuss “Pipeline Management,” “Churn Reduction,” “API Integrations,” and “Seat Pricing.” This signals to the model’s attention heads that you have a comprehensive, well-connected map of the topic.
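“Low entropy” can be made concrete with Shannon entropy over word frequencies, H = -Σ p·log₂ p. The sketch below is a simplified proxy and an assumption for illustration, not how a Transformer actually scores a page: it lowercases the text, splits on whitespace, and treats each word’s frequency as its probability. The function name `word_entropy` and both sample strings are hypothetical.

```python
import math
from collections import Counter


def word_entropy(text: str) -> float:
    """Shannon entropy (in bits) of the word-frequency distribution.

    Simplified proxy (an assumption for illustration): lowercase, split on
    whitespace, and treat each word's frequency as its probability.
    """
    words = text.lower().split()
    counts = Counter(words)
    total = len(words)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


stuffed = "best crm best crm best crm best crm best crm"
varied = ("best crm for pipeline management churn reduction "
          "api integrations and seat pricing")

print(f"stuffed: {word_entropy(stuffed):.2f} bits")
print(f"varied:  {word_entropy(varied):.2f} bits")
```

The stuffed line scores 1.00 bit (two words, evenly split), while the varied line scores log₂ 12 ≈ 3.58 bits because each of its twelve words is distinct. Treat this as intuition only: models work on subword tokens and learned representations, and higher entropy is not automatically better, since random text would also score high.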

2. Front-Load Your Context (Designing for “Attention Heads”)

Transformers have a finite “context window.” You want the model to assign a high “attention weight” to your content immediately.

- The Strategy: Don’t bury the lead. In your first 100 words, explicitly connect the Subject (Who), the Action (What), and the Outcome (Why).

- Why: Clear, logical sentence structures act as anchors. Ambiguity is the enemy of retrieval in a RAG (Retrieval-Augmented Generation) world.
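The “first 100 words” rule can be illustrated with a toy check: when a page is cut into pieces (a snippet, or one chunk in a RAG pipeline), the opening piece only “knows” what appears inside it. The sketch shrinks the budget to 12 words so the effect is visible and uses whitespace splitting as a stand-in for real tokenization; the helper names, the product “Acme CRM,” and both passages are hypothetical.

```python
def opening_slice(text: str, max_words: int) -> str:
    """Keep only the first max_words words.

    Assumption: whitespace splitting stands in for a real subword tokenizer,
    and max_words stands in for a snippet length or retrieval chunk size.
    """
    return " ".join(text.split()[:max_words])


def subject_survives(text: str, subject: str, max_words: int) -> bool:
    """True if the subject phrase appears inside the opening slice."""
    return subject.lower() in opening_slice(text, max_words).lower()


# Hypothetical copy for a hypothetical product, "Acme CRM".
buried = ("In a fast-moving market, teams everywhere want better ways to work, "
          "and after careful testing we found that Acme CRM reduces churn "
          "by flagging at-risk renewals.")
front_loaded = ("Acme CRM reduces churn by flagging at-risk renewals, "
                "giving teams in a fast-moving market time to act.")

for label, text in (("buried", buried), ("front-loaded", front_loaded)):
    print(f"{label}: subject in first 12 words? {subject_survives(text, 'Acme CRM', 12)}")
```

Only the front-loaded version names its subject inside the 12-word slice. Real context windows are far larger, so the point is not truncation alone: any slice of your text read in isolation is easier to interpret when it states the who and the what up front.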

3. Build “Logic Bridges”

GenAI engines (like Claude or ChatGPT) operate on probability. They generate text one token at a time, predicting each next token from everything that came before it.

- The Strategy: Use explicit transition words—Therefore, Conversely, Specifically, As a result.

- Why: These words act as signposts. They help the AI parse the logical flow of your argument, making it easier for the engine to summarize and cite you as a trusted source.
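“Operating on probability” means the model assigns a probability to each possible next token given the text so far, then picks or samples one. As a heavily simplified stand-in (an assumption: real LLMs condition on the whole context with a neural network, not on word-pair counts), the sketch below builds a bigram model that estimates P(next word | previous word) from a tiny hypothetical corpus. The function names are illustrative, not from any library.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model


def next_word_probs(model: dict[str, Counter], prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) from the counts."""
    counts = model.get(prev.lower(), Counter())
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()} if total else {}


# Tiny hypothetical corpus.
corpus = [
    "churn fell therefore revenue grew",
    "costs rose therefore margins shrank",
    "churn fell therefore revenue grew",
]
model = train_bigrams(corpus)
print(next_word_probs(model, "therefore"))
```

In this corpus “therefore” is followed by “revenue” in two of three sentences, so the toy model assigns “revenue” a probability of 2/3 and “margins” 1/3. A word it has never seen gets an empty distribution.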

ContentXir Intelligence

The “Vector” View: When we analyze content performance at ContentXir, we are moving beyond “Traffic Volume.” We are looking at Concept Clarity.

- Does the data model understand your entity?

- If your content is disjointed or filled with fluff, the Transformer cannot place you accurately in its “Vector Space.” You become invisible—not because you lack keywords, but because you lack clarity.
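Placement in “Vector Space” usually means converting text into an embedding vector and comparing it with other vectors, commonly by cosine similarity. The sketch uses hand-written 3-dimensional vectors as an assumption for illustration, not output from any real embedding model; the query, the two pages, and their dimension labels are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-d "embeddings". Dimensions (labels for readability only):
# [CRM features, pricing, off-topic fluff]
query = [0.9, 0.4, 0.0]         # e.g. "best CRM for a small sales team"
focused_page = [0.8, 0.5, 0.1]  # clear, on-topic page
fluffy_page = [0.3, 0.1, 0.9]   # same topic buried under filler

print(f"focused: {cosine_similarity(query, focused_page):.2f}")
print(f"fluffy:  {cosine_similarity(query, fluffy_page):.2f}")
```

With these made-up vectors, the focused page scores about 0.98 against the query and the fluffy page about 0.33. Real embedding models produce hundreds or thousands of learned dimensions with no human-readable labels; the labels here only make the toy numbers readable.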

Next Up on S01E02: Token Economics

Related Insights

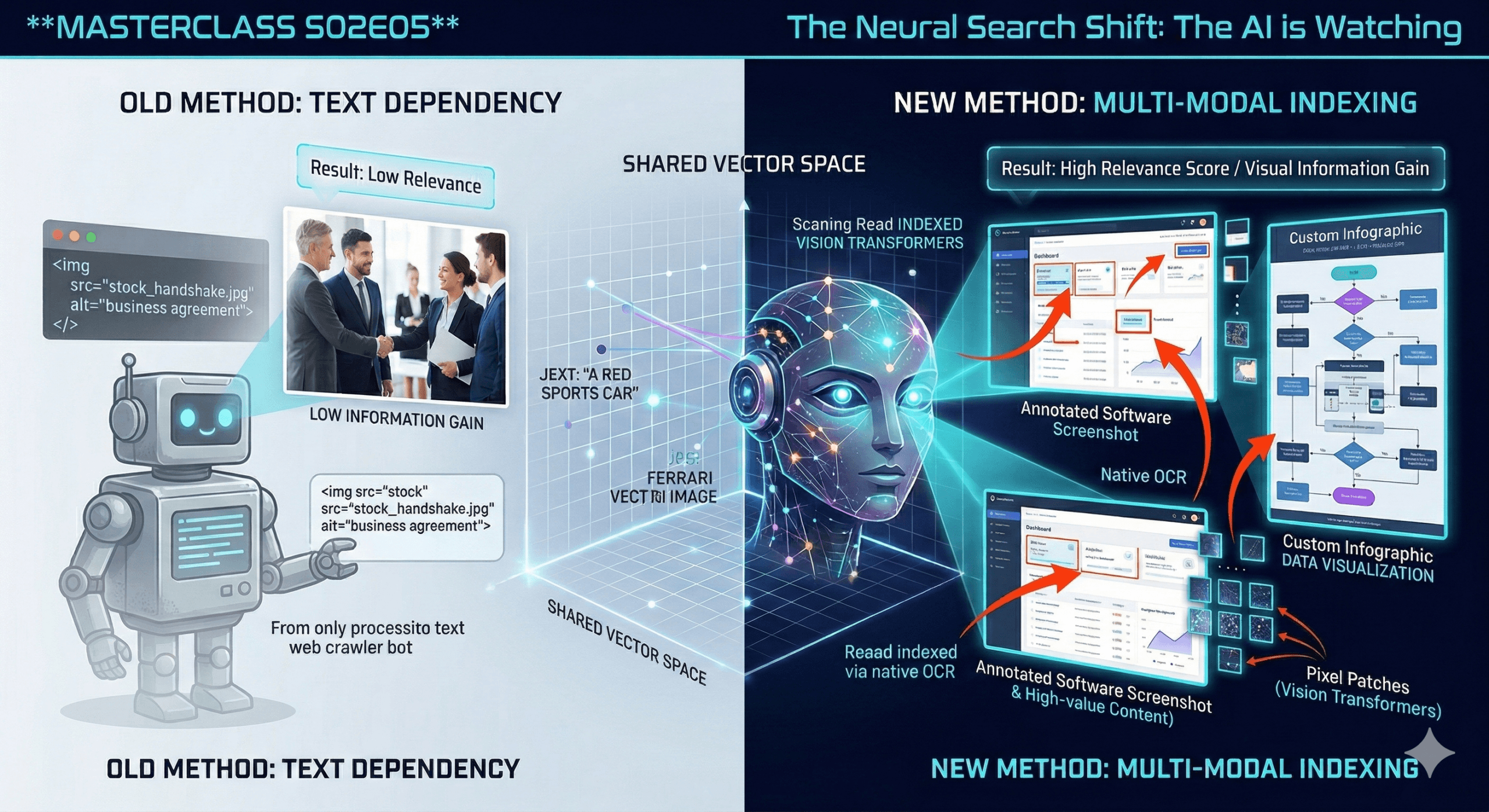

Visual Search & Multi-Modal Indexing | see how the AI is watching you

We are moving beyond text. The most profound shift in AI over the last 12 months isn’t just that it got smarter at reading—it’s that it learned to see. If…

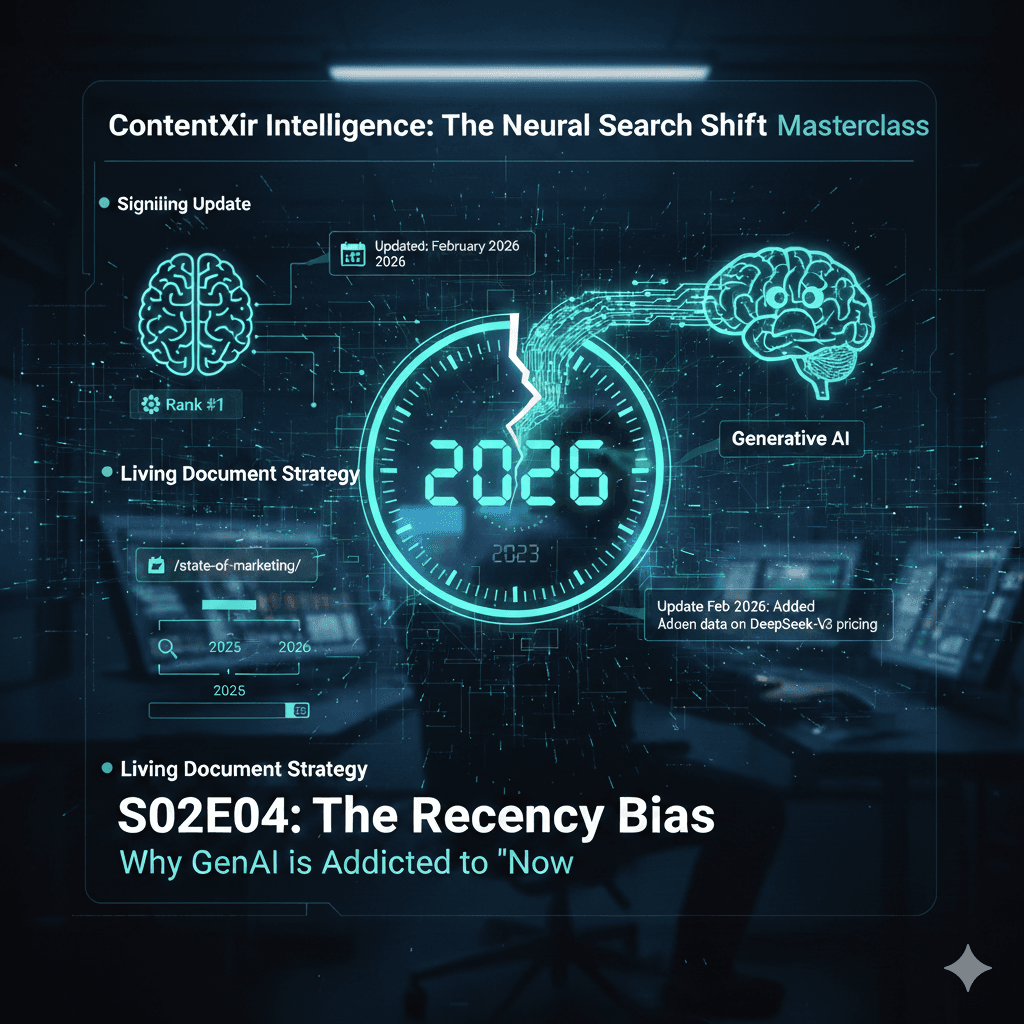

The Recency Bias | Why GenAI is Addicted to “Now”

We have discussed how to be cited. Now we discuss when to be cited. Generative AI has a massive insecurity: it knows its internal memory is outdated. Therefore, it over-corrects…

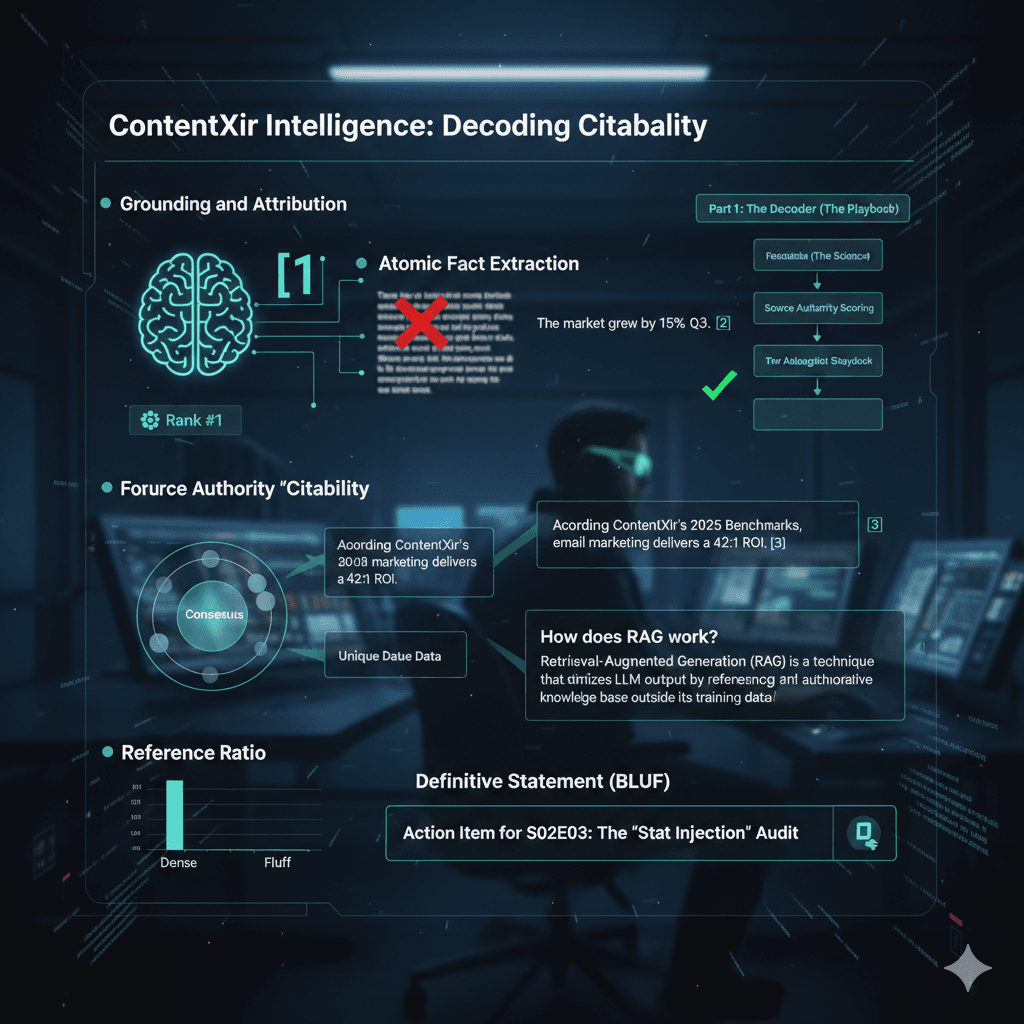

How to win the AIO | Crack the mystery of getting citation in generative search engine result

We are tackling the new currency of the web. Traffic is no longer the primary metric; attribution is. If the AI uses your data to answer a user but doesn’t…