Why AI Lies (And How to Stop It) | The Neural Search Shift

We have discussed the mechanics of how AI reads and thinks. Now we must discuss its biggest flaw: Lying. Or, as the industry calls it, “Hallucination.” Understanding why this happens is key to protecting your brand reputation.

We often treat AI like a database—we expect it to look up a fact and return it. But LLMs are not databases; they are prediction engines. They don’t “know” the truth; they guess the next word based on probability. Sometimes, they get creative. In this episode, we decode the concept of “Temperature” and teach you how to write content that acts as a guardrail against fake answers.

Part 1: The Decoder (The Science)

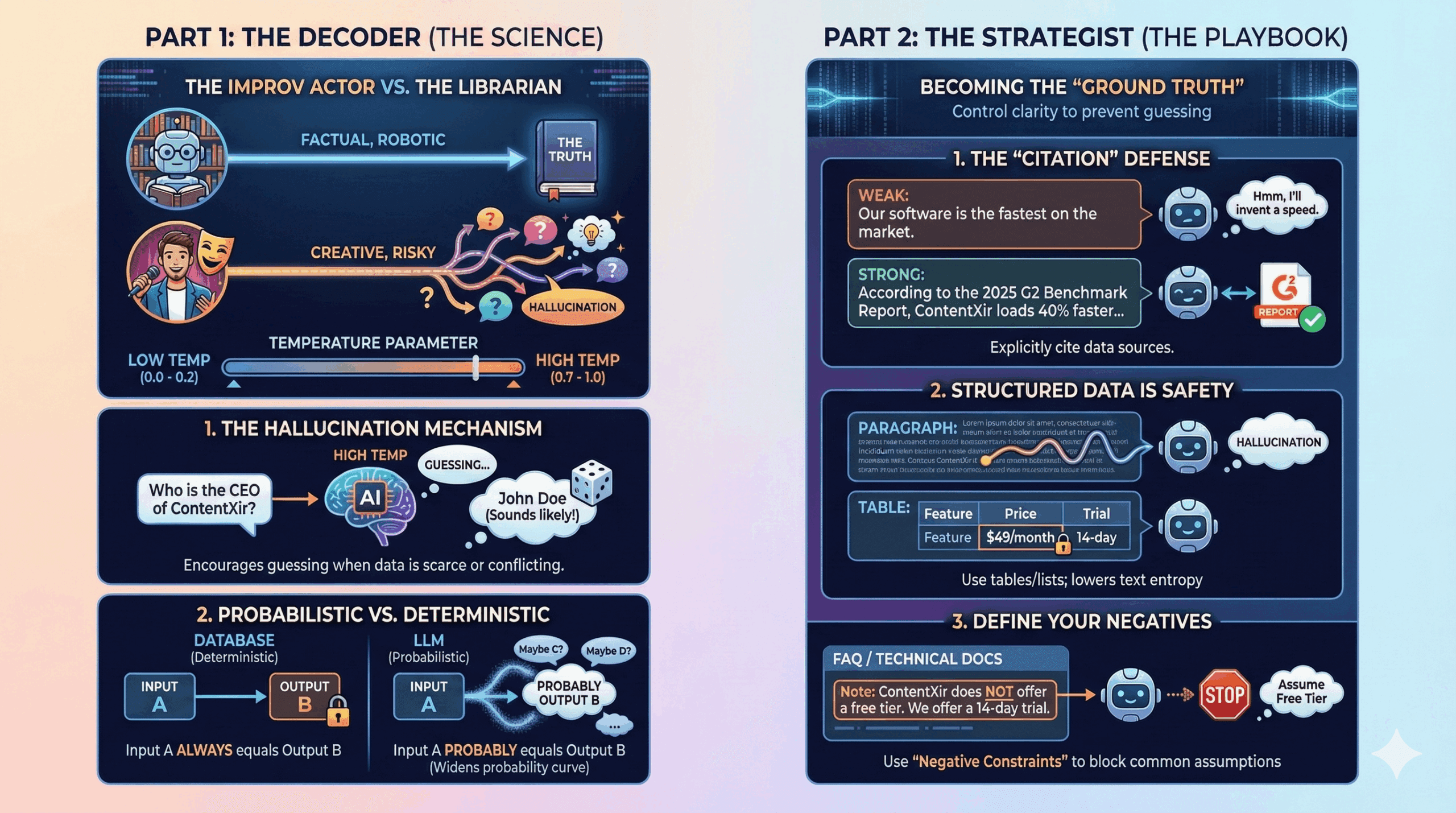

The Improv Actor vs. The Librarian

When a developer sets up an LLM, they control a parameter called Temperature.

- Low Temperature (0.0 – 0.2): The model is strict. It almost always chooses the most statistically probable next word (at exactly 0.0, always). The output is consistent and repetitive, but consistent does not automatically mean correct.

- High Temperature (0.7 – 1.0): The model takes risks. It chooses less probable words to be “creative” or “diverse.”
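To make the dial concrete, here is a minimal Python sketch of temperature-scaled sampling. The candidate words and their scores are made up for illustration, and real engines layer other sampling rules on top, so treat it as a toy model rather than how any specific product works.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        # Treat 0 as greedy decoding: all probability goes to the top score.
        top = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == top else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Pick one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical next-word candidates and scores (illustrative only).
tokens = ["likely", "plausible", "unlikely"]
logits = [4.0, 2.0, 1.0]

low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 1.0)
print("T=0.2:", [round(p, 4) for p in low])
print("T=1.0:", [round(p, 4) for p in high])
print("sampled at T=1.0:", sample_token(tokens, logits, 1.0, random.Random()))
```

With these made-up scores, the top word holds about 84% of the probability at temperature 1.0 and well over 99% at 0.2. The less probable words never vanish at 1.0, which is where the "risk" comes from.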

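1. The Hallucination Mechanism: Hallucination is not a one-off bug; it is a by-product of prediction itself, and a high temperature makes it more likely. (It can still happen at a temperature of 0.0 if the most probable answer is wrong.)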

- If you ask: “Who is the CEO of ContentXir?” and the model doesn’t have enough training data (or if the data is conflicting), a high-temperature setting encourages it to guess rather than say “I don’t know.”

- It predicts a name that sounds like a CEO’s name based on the context of the sentence, even if it is factually wrong.

2. Probabilistic vs. Deterministic: A traditional database is deterministic (Input A always returns Output B). An LLM is probabilistic (Input A probably returns Output B).

- When your content is vague, you widen the probability curve. You are giving the AI permission to roll the dice.
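The "probability curve" can be measured. A common yardstick is Shannon entropy: the flatter the distribution over possible next words, the higher the entropy and the more the outcome depends on the dice. The two distributions below are invented for illustration, not taken from any real model.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; higher means the model is less certain."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical next-word probabilities over four candidate answers.
clear_content = [0.97, 0.01, 0.01, 0.01]  # one answer dominates
vague_content = [0.25, 0.25, 0.25, 0.25]  # every answer looks equally good

print("clear:", round(entropy_bits(clear_content), 3), "bits")
print("vague:", round(entropy_bits(vague_content), 3), "bits")
```

The vague case comes out at exactly 2 bits, the maximum for four options, while the clear case stays well under 1 bit.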

Part 2: The Strategist (The Playbook)

Becoming the “Ground Truth”

You cannot control the “Temperature” setting of Google or ChatGPT. However, you can control the clarity of the input. Ambiguity is the fuel of hallucination. Your goal is to write content so clear that the AI has no room to guess.

1. The “Citation” Defense: Hallucinations often happen when the AI tries to bridge a gap between two ideas.

- The Strategy: Explicitly cite your data sources within the text.

- Weak: “Our software is the fastest on the market.” (Subjective. The AI might invent a speed metric to support this).

- Strong: “According to the 2025 G2 Benchmark Report, ContentXir loads 40% faster than the industry average.” (Specific. The AI can latch onto the entity “G2 Benchmark Report” as a ground truth).

2. Structured Data is Safety: LLMs tend to be less likely to hallucinate when reading structured data (tables, JSON, lists) because each value is tied explicitly to its label, so there are fewer relationships left to infer.

- The Strategy: Don’t leave critical specs in a paragraph. Put them in a Table.

- Why: A table cell containing “$49/month” sits right next to its label, which leaves the probabilistic model very little room to predict “$59/month” without breaking the pattern. It lowers the “entropy” (the uncertainty) of the text.
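As a rough sketch of the idea, the Python helper below turns a list of spec records into a markdown table so each value lands in its own labeled cell. The plan name “Standard” is hypothetical; the price and trial values reuse this article's own examples.

```python
def to_markdown_table(headers, rows):
    """Render a list of dicts as a markdown table, one record per row."""
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(row[h]) for h in headers) + " |")
    return "\n".join(lines)

# Hypothetical pricing record (the plan name is invented for illustration).
specs = [
    {"Plan": "Standard", "Price": "$49/month", "Free tier": "No", "Trial": "14 days"},
]

table = to_markdown_table(["Plan", "Price", "Free tier", "Trial"], specs)
print(table)
```

Keeping the specs in one data structure and generating the table from it also means the page and any other export (JSON, for example) cannot drift apart.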

3. Define Your Negatives: Sometimes you need to tell the AI what you are not.

- The Strategy: Use “Negative Constraints” in your FAQ or technical documentation.

- Example: “Note: ContentXir does not offer a free tier. We offer a 14-day trial.”

- Why: This explicitly blocks the most common hallucination path (assuming a SaaS tool has a free tier) by putting a direct contradiction of that assumption in the text. It works like a hard stop sign.
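One way to publish a negative constraint in machine-readable form is schema.org's FAQPage markup. The sketch below builds that JSON-LD in Python. The question wording is hypothetical, and whether a given AI engine actually reads this markup is an assumption, not a guarantee.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Hypothetical FAQ entry; the answer restates the negative constraint above.
faq = faq_jsonld([
    (
        "Does ContentXir offer a free tier?",
        "No. ContentXir does not offer a free tier. We offer a 14-day trial.",
    ),
])

markup = json.dumps(faq, indent=2)
print(markup)
```

The printed JSON would typically go inside a `<script type="application/ld+json">` tag on the FAQ page, alongside the same sentence in visible text.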

ContentXir Intelligence

The “Confidence Score”: When GenAI engines generate an answer, they assign a probability to every candidate token, which works like an internal confidence score. When that confidence is low, some systems may trigger a web search (RAG) to verify. When confidence is high but the underlying guess is wrong, they hallucinate.

Our data shows that “Direct Assertions” raise confidence scores.

- Passive voice and hedging words (“It seems,” “Usually,” “Might be”) lower confidence and invite the AI to override your data with its own training data.

- Be Assertive: “X is Y.” Not “X is typically considered to be Y.”
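A quick way to audit a draft for this is a hedge finder. The Python sketch below flags the hedging phrases mentioned above; the phrase list is a starting assumption, not an exhaustive or validated set, so extend it for your own copy.

```python
import re

# Hedging phrases from the examples above; extend as needed.
HEDGES = ["it seems", "usually", "might be", "typically"]
HEDGE_RE = re.compile(
    r"\b(" + "|".join(re.escape(h) for h in HEDGES) + r")\b",
    re.IGNORECASE,
)

def find_hedges(text):
    """Return every hedging phrase found in the text, lowercased, in order."""
    return [match.group(0).lower() for match in HEDGE_RE.finditer(text)]

print(find_hedges("X is typically considered to be Y."))
print(find_hedges("X is Y."))
```

The first sentence gets flagged for “typically”; the assertive version comes back clean.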

Action Item for S01E06: The “Fact-Check” Protocol.

- Review your “Pricing” or “Features” page.

- Look for adjectives (fast, cheap, best).

- Replace them with numbers (400ms, $10, #1 ranked).

- Numbers are harder to hallucinate than sentiments.
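The protocol above can be roughed out as a small script. This Python sketch flags sentences that use a vague adjective without any number nearby; the word list and the "contains a digit" rule are simplifying assumptions, so expect both misses and false alarms.

```python
import re

# Vague adjectives to look for (an illustrative list, not a complete one).
VAGUE = {"fast", "fastest", "cheap", "cheapest", "best"}

def audit(text):
    """Return (sentence, vague_words) for sentences with no supporting number."""
    findings = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        words = {w.lower() for w in re.findall(r"[A-Za-z]+", sentence)}
        hits = sorted(words & VAGUE)
        # Only flag the sentence if it offers no digit at all as evidence.
        if hits and not re.search(r"\d", sentence):
            findings.append((sentence, hits))
    return findings

page = "Our software is the fastest on the market. Pages load in 400ms."
for sentence, hits in audit(page):
    print(hits, "->", sentence)
```

Run it against your Pricing or Features copy and rewrite each flagged sentence around a measurable figure.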

Next Up on S01E07: The Season 1 Finale: Training Data vs. RAG

Related Insights

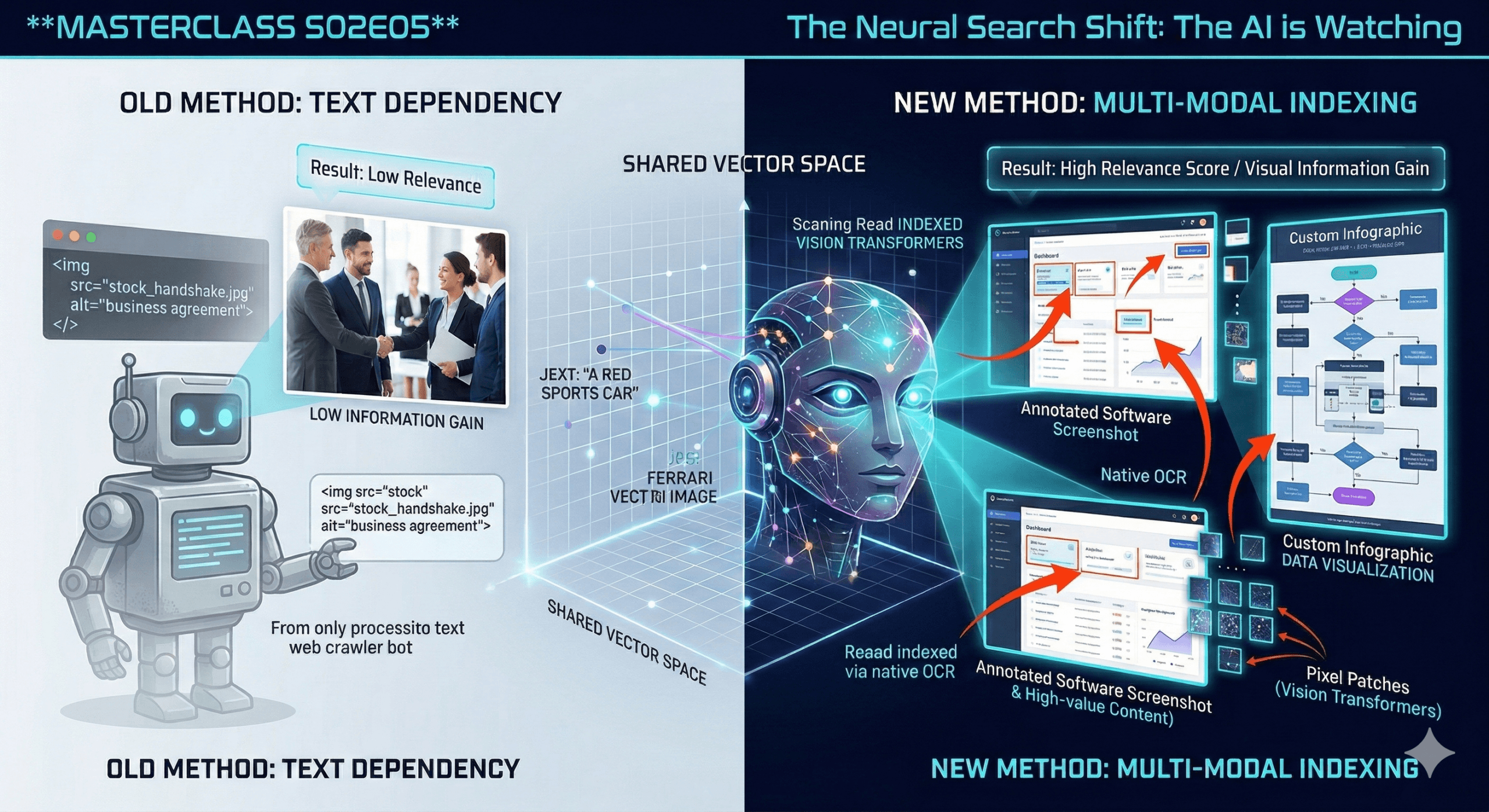

Visual Search & Multi-Modal Indexing | see how the AI is watching you

We are moving beyond text. The most profound shift in AI over the last 12 months isn’t just that it got smarter at reading—it’s that it learned to see. If…

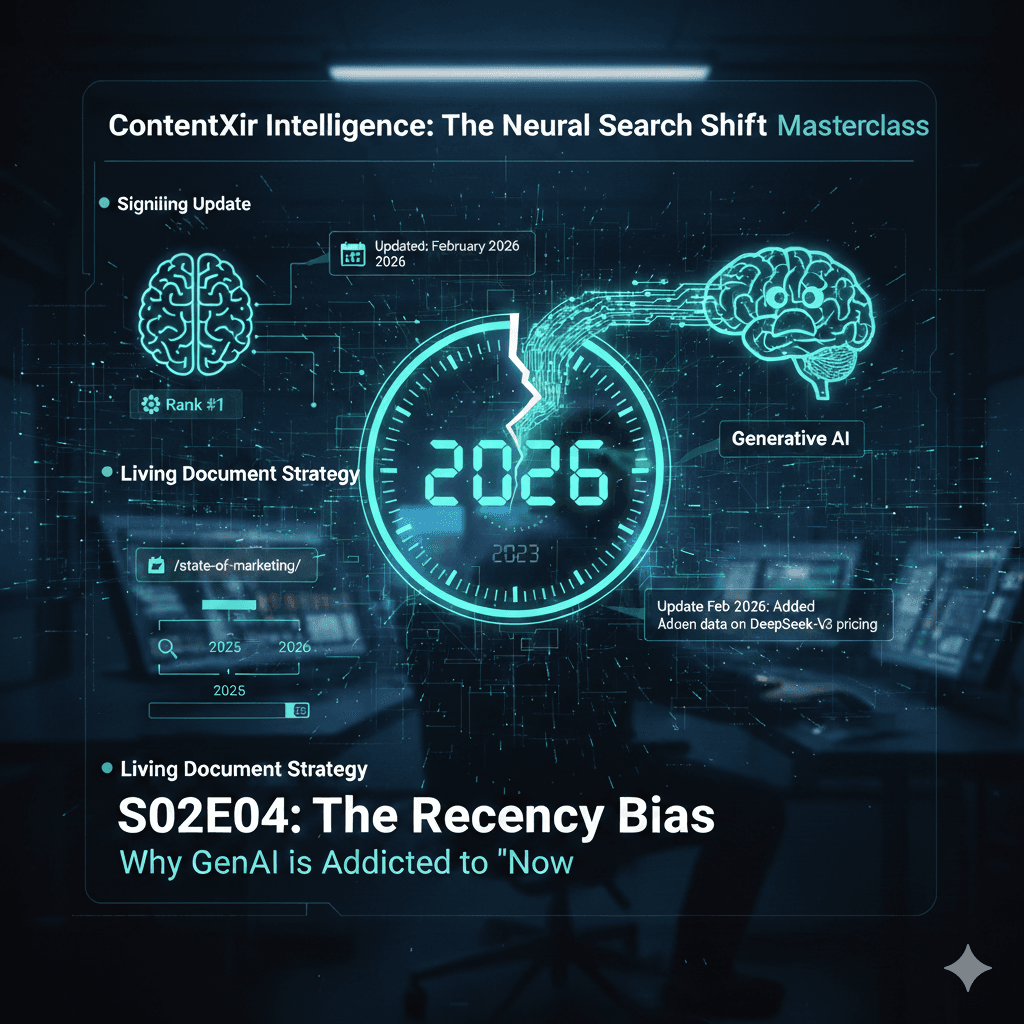

The Recency Bias | Why GenAI is Addicted to “Now”

We have discussed how to be cited. Now we discuss when to be cited. Generative AI has a massive insecurity: it knows its internal memory is outdated. Therefore, it over-corrects…

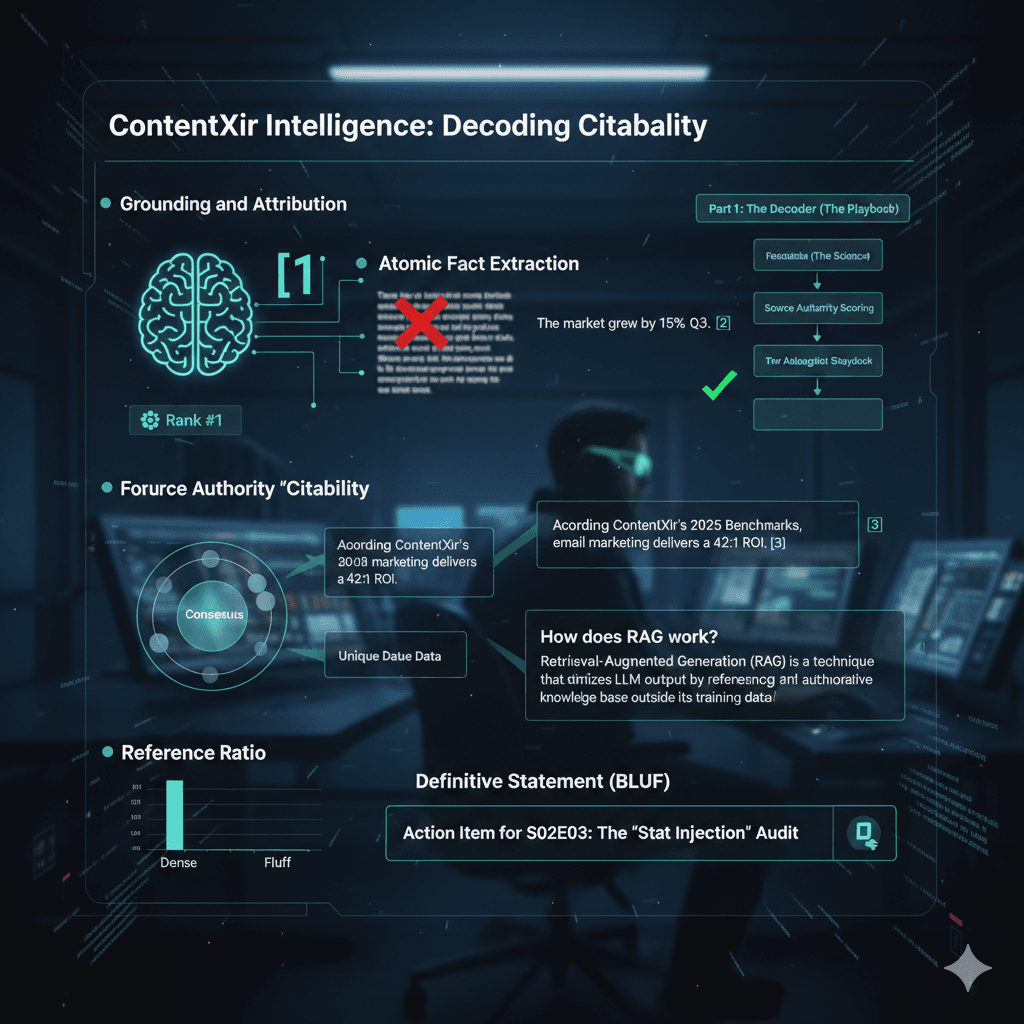

How to win the AIO | Crack the mystery of getting citation in generative search engine result

We are tackling the new currency of the web. Traffic is no longer the primary metric; attribution is. If the AI uses your data to answer a user but doesn’t…